A casual reader of The SSD Review has probably grown accustomed to the huge numbers produced in our RAID tests, as well as the great results seen in our evaluations of consumer SSDs.

We do have a penchant for throwing around numbers in excess of 450,000 IOPS and 4GB/s of throughput in our reviews. For the uninitiated, our new Enterprise Test Protocol (ETP) might therefore cause some confusion, as we have geared its testing towards enterprise needs.

Enterprise SSS (Solid State Storage) brings with it a host of different metrics that are very important to the actual deployment and usage of these flash-based devices. In this article, our goal is to explain our new Enterprise Test Protocol, the reasoning behind its different test measurements, and the techniques we use, so that the end results of this Protocol are easier to understand.

ENTERPRISE SOLID STATE STORAGE (SSS)

SSS is a term that encompasses all of the form factors in which flash-based devices are integrated. When designing our Enterprise Test Protocol, we identified one main requirement: the testing itself must be deployable, and must scale, regardless of the type of solid state storage being analyzed.

We felt the oft-used term SSD (solid state drive) falls short, as it does not cover the entire spectrum of enterprise-class devices that are deployed around the world. There is a wide range of SSS form factors that spans 2.5″, 3.5″, PCIe slot devices, newer PCIe SSDs, mSATA…and the list grows daily.

Be it the latest SSD, EFD, or PCIe NAND device, in any number of configurations, or the latest RAID or HBA adapter pushed to the maximum with attached SSS, we need the results to be relevant over the wide gamut of devices that operate in the enterprise environment.

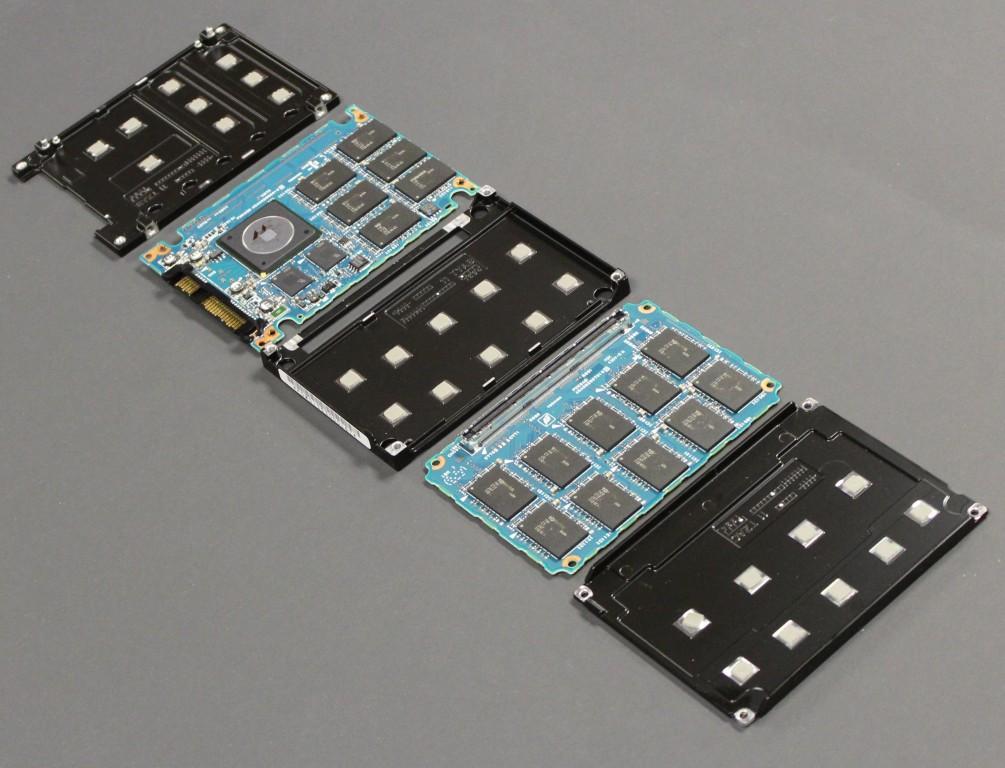

As this photo depicts, the Toshiba MK4001GRZB enterprise SSD may appear to be a ‘typical’ 2.5″ form factor SSD; once opened, however, it is a very complicated SSD that contains many layers. As a whole, Enterprise SSS is an entirely different class of SSS than the consumer variants, and it is designed for a different type of usage as well.

Enterprise SSS is designed for 24/7 use under the most demanding of circumstances, and the number one enemy of any datacenter administrator is downtime. Billions of dollars are spent yearly to optimize systems and to take every conceivable step necessary to avoid downtime. Even under the worst possible circumstances, we can be assured that these datacenters will be operated 24 hours a day.

PERFORMANCE VARIABILITY

Sustainability and predictability are key performance requirements. There is a tremendous difference between the performance of SSS that is ‘Fresh Out of Box’ (FOB) and the sustainable performance that can be expected after days, or even hours, of load (steady state). Steady state is reached when the device is operating at its ‘final’ level of performance, with only a very small amount of performance variability.
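To make the idea of ‘a very small amount of performance variability’ concrete, here is a minimal Python sketch of a window-based steady state check. It is an illustration only, not a description of our protocol: the function name `is_steady_state`, the five-sample window, and the 20% range and 10% drift thresholds are all assumptions chosen for the example.

```python
from statistics import mean


def is_steady_state(samples, window=5, max_range_frac=0.20, max_drift_frac=0.10):
    """Return True if the last `window` samples look like steady state.

    `samples` is a list of per-round results (for example, average IOPS).
    The window size and both thresholds are illustrative assumptions.
    """
    if window < 2:
        raise ValueError("window must be at least 2")
    if len(samples) < window:
        return False  # not enough rounds measured yet

    recent = samples[-window:]
    avg = mean(recent)
    if avg <= 0:
        return False

    # Check 1: the spread between best and worst round must be small.
    if max(recent) - min(recent) > max_range_frac * avg:
        return False

    # Check 2: the least-squares trend line must be nearly flat, so a
    # device that is still slowly degrading is not declared steady.
    xs = list(range(window))
    x_mean = mean(xs)
    slope = (sum((x - x_mean) * (y - avg) for x, y in zip(xs, recent))
             / sum((x - x_mean) ** 2 for x in xs))
    drift = abs(slope) * (window - 1)  # total change across the window
    return drift <= max_drift_frac * avg


# Hypothetical IOPS per test round: a steep FOB decline, then a plateau.
rounds = [90000, 70000, 50000, 30000, 21000, 20500, 20200, 20100, 20000, 19900]
print(is_steady_state(rounds[:5]))  # still falling from FOB
print(is_steady_state(rounds))      # plateau reached
```

The second check matters because a run of results can sit inside the allowed range while still trending steadily downward; requiring a nearly flat trend line guards against stopping the test too early.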

After prolonged use, all flash-based devices will start to slow down and reach steady state. The nature of NAND Flash, as a whole, leads to performance degradation over time. This loss of performance can be dealt with using a number of technologies (Garbage Collection, TRIM) that attempt to keep the SSS running at optimal performance, regardless of the amount of time that the SSS has been used.

Unfortunately, these technologies may rely upon certain factors that aren’t always present in an enterprise environment. Most SSS is deployed into RAID arrays and behind HBAs, where the TRIM command is not yet passed. Idle time is used for a number of performance enhancing operations on consumer SSDs, and as mentioned, idle time is avoided in the enterprise at all costs.

Another key consideration is the amount of data on the SSS itself, as performance can be very different depending upon how full the device is. SSS that is at 20% capacity usually handles mixed read/write workloads much better than SSS that is at 100%. In an enterprise scenario, the device is always full if used correctly. With SSS being a premium data tier with a much higher cost ratio than other solutions, its ability to be used at capacity is crucial.

There are also endurance-enhancing routines that run in the background incessantly. ‘Wear Leveling’ is utilized to ensure that the writes to NAND are evenly distributed. ‘Static Data Rotation’, which moves ‘stale’ data to keep the NAND wear even across the device, is a constant requirement. In the background, there is a never-ending war being waged to protect and extend the finite program/erase (P/E) cycles that are an inherent limitation of all NAND SSS.
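As a rough illustration of why wear leveling matters, the toy Python model below compares erase counts with and without it. This is a deliberately simplified sketch: the function names are hypothetical, each write is assumed to cost exactly one erase of one block, and real controllers use far more elaborate policies than picking the least-worn block.

```python
def write_with_wear_leveling(erase_counts, num_writes):
    """Send each write to the block with the fewest erases so far."""
    counts = list(erase_counts)  # copy so the caller's list is untouched
    for _ in range(num_writes):
        # Index of the least-worn block (first one wins on a tie).
        target = min(range(len(counts)), key=counts.__getitem__)
        counts[target] += 1
    return counts


def write_without_wear_leveling(erase_counts, num_writes, hot_block=0):
    """Send every write to the same 'hot' block."""
    counts = list(erase_counts)
    counts[hot_block] += num_writes
    return counts


fresh = [0, 0, 0, 0]  # four blocks, no wear yet
print(write_without_wear_leveling(fresh, 8))  # one block absorbs all the wear
print(write_with_wear_leveling(fresh, 8))     # wear is spread across all blocks
```

In this model, the unleveled device burns through one block's P/E budget four times faster than the leveled one, even though both performed the same number of writes.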

Add the fact that the vast majority of enterprise workloads are mixed read/write access, which is the hardest type of access for any storage solution to manage, and you have a veritable perfect storm of worst case scenarios.

Quite simply, the cards are stacked against flash-based devices, so they must be engineered accordingly. There are different techniques to manage the inevitability of these circumstances, but these too require overhead and can rob the device of performance. Data integrity and security routines such as ECC (Error Correction Code), embedded parity schemes, Encryption, and Signal Processing (in some cases) also affect performance drastically. These processing loads are why solid firmware implementation is an absolute must with Enterprise SSS.

The overriding point is that enterprise SSS is designed to operate under the worst case scenario constantly. This requires that we test under these very circumstances. Backing the SSS into a corner and measuring the real ‘Steady State’ performance is key. This raises the question: how do we know when we are there?

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Hi Paul,

You say ‘All tests must be ran consecutively, with no interruptions’ and I understand why as any pause would allow GC activity the opportunity to step back from the transition to steady state. How do you kick off the next test in a series in IOmeter? Are you doing this manually in the IOmeter control panel (as quickly as you can) or have you automated it in some way?

Regds, JR