ANVIL STORAGE UTILITIES PROFESSIONAL

Anvil’s Storage Utilities (ASU) is the most complete test bed available for solid state drives today. The benchmark displays results not only for throughput but also for IOPS and disk access times. Beyond its preset SSD benchmark, it includes endurance testing and threaded I/O read, write and mixed tests, all of which are very simple to understand and use in our benchmark testing.

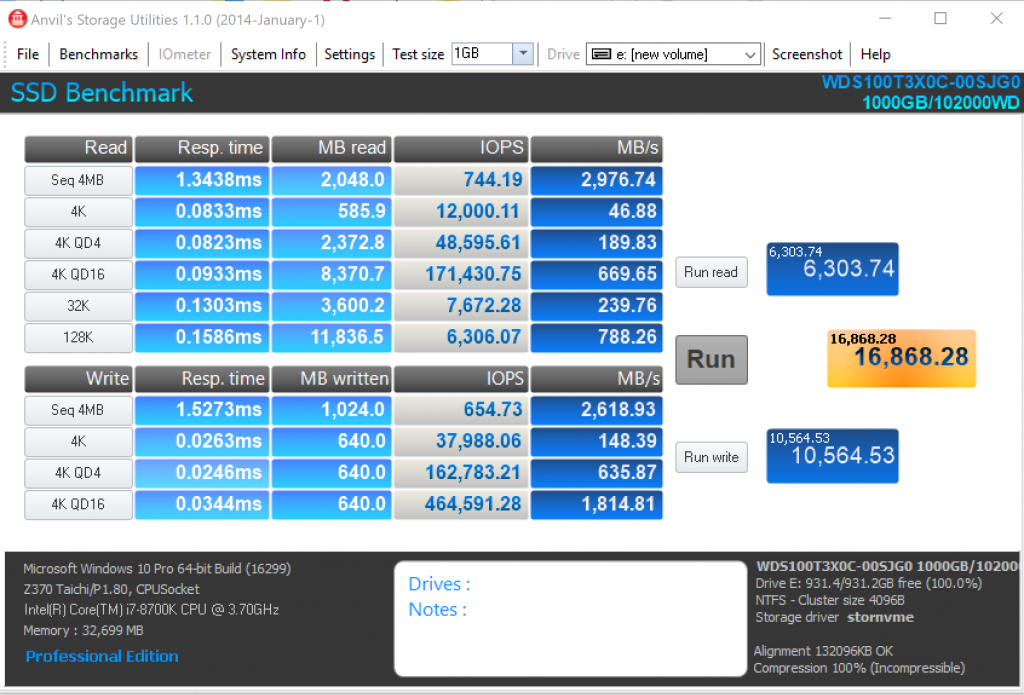

Once again, we are not overly impressed with the performance observed in Anvil; however, the 4K QD16 write IOPS are encouraging.
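Because Anvil reports both IOPS and throughput, it can help to see how the two relate: throughput is simply IOPS multiplied by the transfer size. The following is a minimal sketch of that conversion for 4K transfers. The IOPS figure is a hypothetical placeholder and is not a result from this review.

```python
def iops_to_mb_per_s(iops: float, block_size_bytes: int = 4096) -> float:
    """Convert an IOPS figure to throughput in MB/s (1 MB = 1,000,000 bytes)."""
    return iops * block_size_bytes / 1_000_000


# Hypothetical 4K write IOPS value, chosen only for illustration.
example_iops = 100_000
print(f"{example_iops} IOPS at 4 KiB = {iops_to_mb_per_s(example_iops):.1f} MB/s")
```

With these assumed inputs, 100,000 IOPS at 4 KiB works out to 409.6 MB/s, which is why a modest-looking IOPS gain at small block sizes can still matter.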

AJA VIDEO SYSTEMS DISK TEST

The AJA Video Systems Disk Test is relatively new to our testing and measures the transfer speed of video files at different resolutions and with different codecs.

The SSD Review uses PCMark 8’s Storage test suite to create testing scenarios that might be used in the typical user experience. With 10 traces recorded from Adobe Creative Suite, Microsoft Office and a selection of popular games, it covers some of the most popular light to heavy workloads. Unlike synthetic storage tests, the PCMark 8 Storage benchmark highlights real-world performance differences between storage devices. After an initial break-in cycle and three rounds of the testing, we are given a file score and bandwidth amount. The higher the score/bandwidth, the better the drive performs.

The total score of 5069 is decent, although the average bandwidth of 547MB/s is much lower than that of many other SSDs, as shown in the following chart:

TxBench is one of our newly discovered benchmarks, and it works much the same as Crystal DiskMark but with several additional features. Advanced load benchmarking can be configured, and the tool also offers full drive information and data erasing via secure erase, enhanced secure erase, TRIM and overwriting. Simply click on the title for a free copy.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

“WD speaks to SLC cache technology which we discussed in most recent reports. For the most part, this is sold as the reason for SSD performance gain, however, its use is twofold with respect to the newer 3D TLC memory as it is required to reach those performance benchmarks, unlike previous SSDs.”

I think it should be pointed out that SLC caching has existed in every SSD utilizing TLC NAND since its advent, including planar 2D TLC flash. The ADATA SU800, for example, (released in 2016) utilizes a massive dynamic cache buffer due to what was originally an immature controller (SM2258) and the Micron 32-layer 384-Gbit TLC NAND, which is very slow stuff.

In addition, SLC caching algorithms increase endurance, often substantially.

pSLC caching is one part of the reason that 3D TLC surpassed 2D MLC in endurance (substantially) and peak performance, while increasing density; very important for consumers of all types, as MLC is significantly more expensive.

You said you were going to address the SLC cache and potential problems when exceeding it…??

In every test I see, the real-world testing always stays below that magical SLC cache limit, so these drives come out of it looking very good. It’s a bit disappointing, because I use a lot of different drives for different purposes and I have not found a single TLC drive that shows good sustained sequential speed, for example. Yet in review after review, these drives are touted as “just as good” as the 970 Pro or similar. I guess it comes down to the workflow or particular needs of the user. As an example, I just bought a JMS583 10Gbps PCB and a “thumb” drive enclosure for it. Using a thermal pad to dissipate the heat through the aluminium enclosure, even with a USB 3 host I can sustain roughly the USB 3.0 limit for the whole transfer using an excellent MLC drive. With a TLC drive, the speed drops off so badly after the cache is full that it prolongs the transfer operation by maybe 200%. The same would be true in any situation where you are generally working with massive amounts of data.
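To put rough numbers on the cache-exhaustion effect described above, here is a minimal sketch of a two-phase transfer model: data is written at the cached speed until the SLC cache is full, then at the slower post-cache speed. Every figure in it (transfer size, cache size, both speeds) is a hypothetical assumption chosen for illustration, not a measurement of any particular drive.

```python
def transfer_seconds(total_gb: float, cache_gb: float,
                     cached_mb_s: float, post_cache_mb_s: float) -> float:
    """Estimate transfer time when speed drops once the SLC cache is full.

    Uses decimal units (1 GB = 1000 MB). All inputs are assumptions.
    """
    cached_part_gb = min(total_gb, cache_gb)      # written at full speed
    remaining_gb = total_gb - cached_part_gb      # written after the cache fills
    return (cached_part_gb * 1000 / cached_mb_s
            + remaining_gb * 1000 / post_cache_mb_s)


# Hypothetical scenario: 100 GB transfer over a link that sustains ~400 MB/s.
sustained = transfer_seconds(100, cache_gb=100, cached_mb_s=400, post_cache_mb_s=400)
cache_limited = transfer_seconds(100, cache_gb=20, cached_mb_s=400, post_cache_mb_s=100)

increase_pct = (cache_limited - sustained) / sustained * 100
print(f"sustained drive:     {sustained:.0f} s")
print(f"cache-limited drive: {cache_limited:.0f} s")
print(f"transfer takes {increase_pct:.0f}% longer")
```

Under these assumed numbers, the sustained drive finishes in 250 seconds and the cache-limited drive in 850 seconds, which is 240% longer. Read this way, "prolonged by 200%" means the transfer takes about three times as long as it would at the sustained speed.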

I mean, every other professional review shows what happens when the SLC cache buffers are exhausted. The thing is, it’s not easy to do. It takes software written just to saturate queue depths of 30+ at speeds greater than 3.8GB/s. The majority of consumers could never do this. Certain workstation workloads can, but those people are generally looking at MLC-based SSDs.

You state that operations can be prolonged by 200%. 200% of what, exactly?