WRITE TESTING

Because of the nature of solid state storage, write speed usually takes a precipitous plunge once the drive fills up. To see what happens, we can take a fresh drive and issue 4K random writes across the entire drive for 300 minutes.

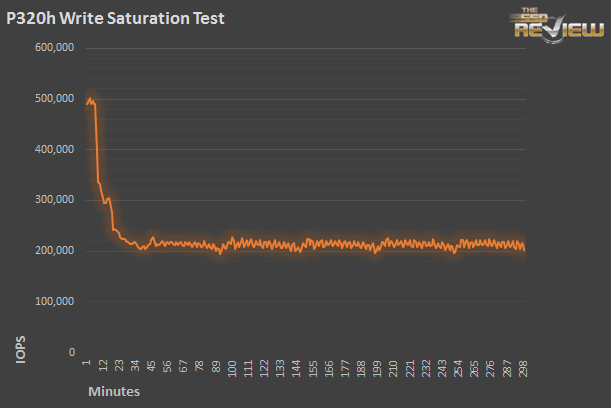

First, we write to the P320h with 4K random writes at a total outstanding IO count of 256 over 300 minutes. The drive has been freshly purged, with no other preconditioning.

The P320h starts out strong, coming in right around the 500,000 IOPS mark. However, at that speed, it doesn’t take long to start filling up the drive. As that happens, IOPS drop quickly, eventually settling right around the 212K mark, or over 850MB/s sustained. The Micron could conceivably do that for the rest of its life, until it reaches somewhere around 50 petabytes written, an extraordinary amount of endurance by any measure.
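To put those numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes a "4K" write is 4,096 bytes, that a petabyte is 10^15 bytes, and that the drive writes nonstop at the steady-state rate; none of these assumptions come from Micron's spec sheet, and the function names are ours.

```python
# Back-of-the-envelope math for the steady-state figures quoted above.
# Assumptions (ours, not Micron's): 4K = 4096 bytes, 1 PB = 1e15 bytes,
# and the drive is written continuously at the steady-state rate.

IO_SIZE_BYTES = 4 * 1024
STEADY_STATE_IOPS = 212_000
ENDURANCE_BYTES = 50e15  # "somewhere around 50 petabytes"


def throughput_mb_per_s(iops, io_size_bytes=IO_SIZE_BYTES):
    """Convert an IOPS figure into decimal megabytes per second."""
    return iops * io_size_bytes / 1e6


def days_to_write(total_bytes, iops, io_size_bytes=IO_SIZE_BYTES):
    """Days of nonstop writing needed to push total_bytes through the drive."""
    seconds = total_bytes / (iops * io_size_bytes)
    return seconds / 86_400


if __name__ == "__main__":
    print(f"{throughput_mb_per_s(STEADY_STATE_IOPS):.0f} MB/s sustained")
    print(f"{days_to_write(ENDURANCE_BYTES, STEADY_STATE_IOPS):.0f} days of nonstop 4K random writes")
```

Under those assumptions, the steady-state rate works out to roughly 868MB/s, and it would take a bit under two years of uninterrupted writing to reach 50 petabytes.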

If we add latency to the mix, the relationship between it and IOPS becomes more apparent. Notice that the yellow and orange lines above look rather similar. As the drive fills and IOPS drop, latency increases. In the case of the P320h, average latency settles right around 600µs.

Why do latency and IOPS show this kind of inverse relationship? When the drive is empty, there are tons of free blocks to write to, meaning writes can be accomplished quickly. But once there are no free blocks, the drive gets locked in a struggle to free up dirty blocks and make them available again. This requires several steps, and causes transactions to be delayed.
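The struggle described above can be sketched with a toy model. This is not how the P320h's firmware works (that is not public); it is a minimal simulation we made up, with a page-mapped drive, uniformly random writes, and a "greedy" garbage collector that always cleans the block with the fewest live pages. It reports write amplification: how many pages the flash actually has to program for each page the host writes. The block counts and spare fractions are arbitrary illustrative values.

```python
import random


def simulate_write_amplification(spare_fraction, num_blocks=64, pages_per_block=32,
                                 host_writes=100_000, seed=0):
    """Toy flash model: return flash page writes per host page write.

    Assumes spare_fraction leaves more than two blocks of spare space,
    otherwise garbage collection cannot make progress.
    """
    rng = random.Random(seed)
    logical_pages = int(num_blocks * pages_per_block * (1 - spare_fraction))
    location = [None] * logical_pages           # logical page -> block holding it
    valid = [set() for _ in range(num_blocks)]  # live logical pages in each block
    used = [0] * num_blocks                     # pages programmed since last erase
    free_blocks = list(range(1, num_blocks))    # erased blocks ready for writing
    active = 0                                  # block currently being filled
    flash_writes = 0

    def program(lpn):
        nonlocal active, flash_writes
        if used[active] == pages_per_block:     # active block is full: open a fresh one
            active = free_blocks.pop()
        old = location[lpn]
        if old is not None:
            valid[old].discard(lpn)             # the previous copy becomes stale
        valid[active].add(lpn)
        location[lpn] = active
        used[active] += 1
        flash_writes += 1

    def collect_garbage():
        # Greedy policy: clean the full block holding the fewest live pages.
        full = [b for b in range(num_blocks)
                if b != active and used[b] == pages_per_block]
        victim = min(full, key=lambda b: len(valid[b]))
        for lpn in list(valid[victim]):
            program(lpn)                        # relocating live data costs extra writes
        used[victim] = 0                        # erase the victim
        free_blocks.append(victim)

    for _ in range(host_writes):
        while len(free_blocks) < 2:             # keep one block in reserve for relocation
            collect_garbage()
        program(rng.randrange(logical_pages))
    return flash_writes / host_writes


if __name__ == "__main__":
    for spare in (0.10, 0.25):
        wa = simulate_write_amplification(spare)
        print(f"spare {spare:.0%}: write amplification {wa:.2f}")
```

While free blocks remain, every host write costs exactly one flash write. Once they run out, each host write also has to pay for relocating live pages, which is the extra work that drags IOPS down and pushes latency up. Giving the model more spare space lowers that overhead.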

Lastly, what happens when the P320h gets over-provisioned by 100GB? As you can see in the chart above (represented by the blue line), you get almost 50% more sustained IOPS. Though the original and over-provisioned runs start at the same place, they end up somewhere quite different. With more spare space to manage blocks, the drive can keep more of its fresh-out-of-box performance. By giving up 15% of the 650GB of total available capacity, a 50% increase in steady state IOPS can be had. That’s a great trade-off if the extra speed is needed more than the 100GB of space.
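The trade-off is easy to check with a little arithmetic. The sketch below uses the figures quoted in this section (650GB available, 100GB set aside, 212K steady-state IOPS) and treats the "almost 50%" gain as a full 50%, so the projected IOPS figure is an upper-end estimate rather than a measured result. The function name is ours.

```python
def overprovision_tradeoff(total_gb, reserved_gb, base_iops, iops_gain):
    """Summarize capacity given up versus projected steady-state IOPS.

    iops_gain is a fraction, e.g. 0.5 for a 50% improvement.
    """
    return {
        "capacity_given_up": reserved_gb / total_gb,
        "usable_gb": total_gb - reserved_gb,
        "projected_iops": base_iops * (1 + iops_gain),
    }


if __name__ == "__main__":
    # Figures from the text; the 50% gain is rounded up from "almost 50%".
    result = overprovision_tradeoff(total_gb=650, reserved_gb=100,
                                    base_iops=212_000, iops_gain=0.5)
    print(f"capacity given up: {result['capacity_given_up']:.1%}")
    print(f"usable capacity:   {result['usable_gb']}GB")
    print(f"projected IOPS:    {result['projected_iops']:,.0f}")
```

With those inputs, about 15.4% of the capacity is traded for a projected steady state of up to roughly 318K IOPS on the remaining 550GB.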

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Micron doesn't own the controller. It is made by IDT.

We are aware of that, thanks. We worded it that way because this is by no means a simple stock implementation of a controller, and nothing similar could have been accomplished without Micron’s engineering expertise and software. Great point, and perhaps we could reword things just a bit…

Micron has a Minneapolis-based controller team which did much of the work on the controller. Basically, IDT has a stock PCIe controller, but it’s easily modified for custom jobs. Micron refined the design for the P320h. IDT now has a reference NVMe design, but the NVMe standard is far from universal yet. One day, a PCIe SSD won’t need a special driver, but today they do.

Micron developed and owns the chip; IDT just fabs it.

Incorrect. This is the very same controller that is used with the new NVMe controllers that IDT has developed.

Just to help you out, this is what was posted at Anand's after they inadvertently stated it was NVMe:

Update: Micron tells us that the P320h doesn’t support NVMe, we are digging to understand how Micron’s controller differs from the NVMe IDT controller with a similar part number.

Our interpretation of the chip appears to be correct as it is written, and this same ‘structure’ has been used in the SSD industry before. This is not a simple plug-and-play adaptation of a chip, but rather a custom package.

Thanks again.

Yes, it isn't NVMe, but it is an IDT chip, therefore it is not developed in house by Micron.

old news

Just needs a few heat sinks and a fan or maybe a water block to keep it cooler.

Todd – What makes you think you know so much about this chip?

Is the RAIN implementation safe enough to use without RAID 1 running outside of it (say, across 2 350GB cards)? It sounds good, but if you have a firmware or controller related failure you’re still at risk, right?

Is this bootable? And just for kicks, what would the as-ssd results be?