MEASURING PERFORMANCE

As with all of our tests, the following tests were performed after a secure erase of the drive. The drive was also conditioned with a predefined workload until it reached steady state. We also test across the entire span of the drive.

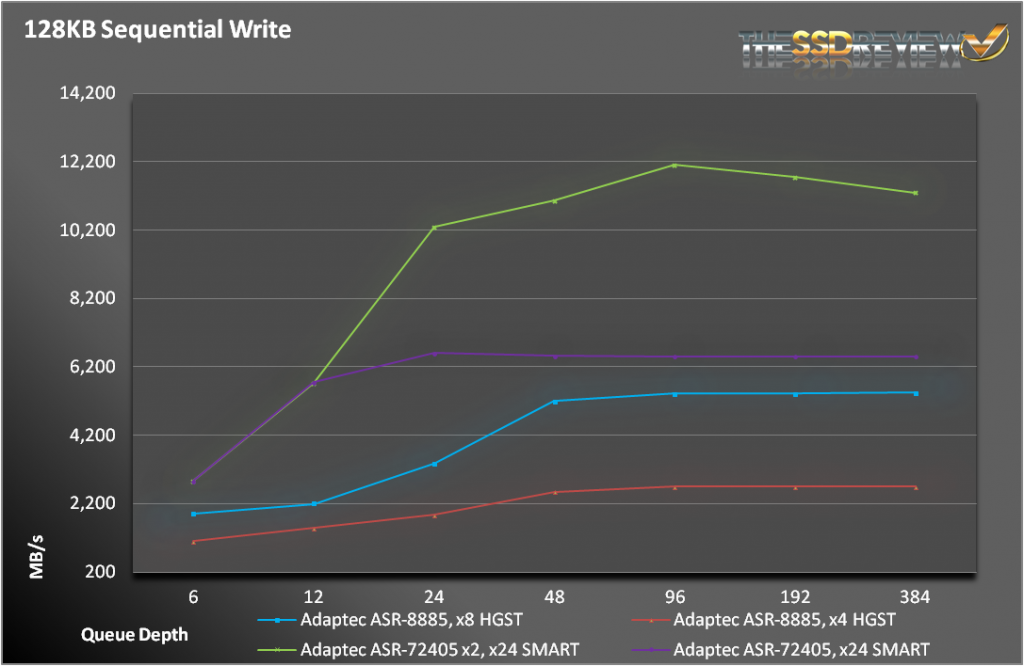

We paired our ASR-8885 with both 4 and 8 HGST SSD800MMs. We then compared it to the ASR-72405 with 24 SMART Optimus SSDs, and then to a pair of ASR-72405s with the same SMART SSDs split evenly between them. It should be noted that with the Series 7, performance topped out before 16 drives. Even though we did not have 16 identical SSDs on hand to test this, we believe, based on specifications, that the Series 8 would come out nearly even on most tests. So we wanted to know which would perform better: a bunch of 6Gbps SSDs or a handful of 12Gbps SSDs. Let's find out.
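To put the 6Gbps vs. 12Gbps question in numbers, the sketch below computes idealized interface ceilings for the two drive setups. It assumes (our assumption, not a measured result) that each drive uses a single SAS lane, that both 6Gbps and 12Gbps SAS use 8b/10b line encoding, and that all other protocol overhead can be ignored. The function names are hypothetical.

```python
# Idealized interface ceilings for the drive setups compared above.
# Assumptions: one SAS lane per drive, 8b/10b encoding (10 line bits carry
# 8 data bits), no other protocol overhead. Real drives sit below these caps.

def sas_link_mb_per_s(line_rate_gbps: float) -> float:
    """Usable payload bandwidth of one SAS lane in MB/s under 8b/10b encoding."""
    data_bits_per_s = line_rate_gbps * 1e9 * 8 / 10
    return data_bits_per_s / 8 / 1e6


def aggregate_ceiling_mb_per_s(drives: int, line_rate_gbps: float) -> float:
    """Upper bound if every drive could saturate its own lane at once."""
    return drives * sas_link_mb_per_s(line_rate_gbps)


if __name__ == "__main__":
    print(f"one 6Gbps lane:  {sas_link_mb_per_s(6):.0f} MB/s")
    print(f"one 12Gbps lane: {sas_link_mb_per_s(12):.0f} MB/s")
    print(f"24 x 6Gbps SSDs: {aggregate_ceiling_mb_per_s(24, 6):.0f} MB/s")
    print(f"8 x 12Gbps SSDs: {aggregate_ceiling_mb_per_s(8, 12):.0f} MB/s")
```

On paper, each 12Gbps drive gets twice the link headroom of a 6Gbps drive, but 24 slower links still add up to more raw interface bandwidth than 8 faster ones; what each drive actually delivers is a separate question.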

For sequential writes, eight 12Gbps SSDs were simply not enough to match the parallelism and raw power of 24 SMART Optimus SSDs. Even at nearly 700MB/s each, the eight SSD800MMs could not quite saturate the ASR-8885. We would have needed at least one or two more drives to clear 6GB/s.
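The "one or two more drives" estimate follows from simple division. This is a minimal sketch assuming perfectly linear scaling with drive count (an idealization); the 650MB/s figure is a hypothetical reading of "nearly 700MB/s", not a measured value.

```python
import math


def drives_needed(target_mb_per_s: float, per_drive_mb_per_s: float) -> int:
    """Smallest drive count whose combined throughput reaches the target,
    assuming throughput scales perfectly linearly with drive count."""
    return math.ceil(target_mb_per_s / per_drive_mb_per_s)


if __name__ == "__main__":
    print(8 * 700)                   # eight drives at a full 700MB/s: 5600 MB/s
    print(drives_needed(6000, 700))  # 9 drives at a full 700MB/s each
    print(drives_needed(6000, 650))  # 10 drives at a hypothetical 650MB/s each
```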

Read operations had far fewer problems reaching 6GB/s. In this test, the eight HGST SSDs held their own against the 24 SMART SSDs. The Series 8 solution trailed at lower queue depths mainly because it had only a third as many drives to spread the IOs across. At higher queue depths, the difference was only 200MB/s.

Random operations are where the superiority of the 12Gbps solution shows itself. In 4K random writes, the Series 8 was 35% faster than the fully loaded, 24-drive Series 7. It was still no match for a pair of ASR-72405s, but 500K IOPS is still incredibly impressive.

Even more impressive than 500K write IOPS is the 700K read IOPS that we got out of our Series 8 system. A single ASR-8885 with eight SSD800MMs beat out a pair of ASR-72405s with 24 SMART Optimus SSDs at 4 out of 7 queue depths. Even though the Series 8 fell a few thousand IOPS short of taking the crown for most IOPS, it lived up to all expectations.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |


Wow.

I didn’t see the ASR-8885 listed via the Amazon link provided. Is it available yet? If so, who besides Amazon would carry it? And likewise for the HGST SSD800MM?

We commonly review products before they become available, so I would keep an eye on the Amazon links for the card. As for the HGST 12Gbps SAS SSDs, I don’t think we will see those available through retail channels anytime soon, keeping in mind that they are still new and very hard to come by.

Thanks in advance for using our links!

The “wow” was for the performance numbers. Again, wow 8) and thanks for the informative review. I would like to buy, but I am going to have to save my pennies for a while.

You wrote:

“… Finally, you can see the SFF-8643 internal connectors and SFF-8644 external connectors. With both external and internal ports, the ASR-8885 has a lot of flexibility….”

OK, so with, for example, 8 internal and 8 external ports, does this “flexibility” mean I can simply connect 16 drives internally to the RAID card and, for example, use all 16 in a RAID 10?

If you want to connect only internal drives using the 8885, you would want to use a backplane with expanders, as physically only 8 directly cabled drives can be connected to the internal ports on this model. However, if you had an external drive enclosure attached to this model, you could use the external ports in combination with the internal ones.

With the use of an expander based enclosure, you would be able to see all the drives in the enclosure through one connection (single point to point cable from controller to enclosure).

You could also use a 16-port internal controller such as the 81605ZQ, which does offer 16 internal ports. With either controller, you would be able to create arrays using all available drives, whether in a single array, divided among different arrays, or using the same drives for multiple arrays (up to 4 arrays can be created with the same set of drives).

Adaptec by PMC

Thank you! Always great to have the manufacturer jump in to elaborate!

Hi

Do you know if the new Series 8 behaves the same way as the previous Series 6 and 7? They seem to shine at high queue depths, but can you tell me whether they made progress on random 4K at QD1? Thank you

Hi Ricardo,

Adaptec has been working on improving lower queue depth performance, and the upcoming firmware release, expected at the end of 2013/beginning of 2014, should reflect these changes in Series 7 and 8 performance numbers. However, even when these changes are implemented, queue depth 1 performance is expected to remain low compared with the IO depths more common in performance applications.

This is especially true because at IO depth 1, the full latency of every stage in the IO path is exposed: from the application, driver, command thread, and drive all the way back. At any moment only one stage is active. All the others have to wait, because there is only one IO.
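The queue depth 1 explanation above is an instance of Little's law: throughput equals the number of IOs in flight divided by the time each IO spends in flight. The sketch below uses the stage names from the reply, but the per-stage latencies are purely illustrative assumptions, not Adaptec figures.

```python
# Hypothetical per-stage latencies in microseconds (illustrative only).
STAGES_US = {
    "application": 5.0,
    "driver": 10.0,
    "command thread": 20.0,
    "drive": 65.0,
}


def qd1_iops(stage_latencies_us: dict) -> float:
    """At queue depth 1 only one IO is in flight, so every IO pays all
    stage latencies in series before the next IO can start."""
    total_us = sum(stage_latencies_us.values())
    return 1_000_000 / total_us


def littles_law_iops(queue_depth: int, latency_us: float) -> float:
    """Little's law: IOPS = IOs in flight / time each IO spends in flight.
    latency_us should be the latency observed at that queue depth, which
    usually grows as the queue deepens."""
    return queue_depth * 1_000_000 / latency_us


if __name__ == "__main__":
    print(qd1_iops(STAGES_US))          # 100 us per IO in total
    print(littles_law_iops(32, 200.0))  # deeper queue, higher per-IO latency
```

With deeper queues, the stages overlap on different IOs, so IOPS can climb even while per-IO latency rises. At QD1 there is nothing to overlap.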

Thank you for the answer Adaptec.

I’ve been using an Adaptec 6405 with a ZMM module, connecting a pair of Samsung 830 SSDs, specifically in a Windows environment. I learned that Dynamic mode is best suited for Windows environments (every kind of sequential and random workload). OK, I know the benchmarks (wherever they are) don’t tell the true story, and my “slow” 6405 is really fast, scanning a single Seagate Barracuda 7200.10 at 920MB/s (with all caches enabled: the controller cache, protected by the ZMM, and the drive’s own cache). I asked about random 4K QD1 earlier because each manufacturer tunes its controllers for a specific environment, for example: Areca for random (read-ahead and write-back on everything), LSI for random too, 3ware for sequential, and ATTO and Adaptec for sequential. When booting Windows from the controller, you would expect to see some nice random results, and in my opinion it would be great to see the Adaptec cache work effectively on random patterns.

Another question: why does the ZMM module have 4GB of SLC when it is supposed to support only 512MB? Does the module perform other actions, such as actively helping with reads and writes (apart from receiving the cache contents flushed to it in case of a power failure)?

It does not help with reads or writes. It is just a replacement for a BBU. A BBU has problems maintaining voltage; in some cases you have to drain it to gauge how much charge it holds, or to extend its life expectancy. That is the downside: it cannot hold a charge for very long, so you have to drain the BBU and recharge it again. That means that for a few hours in the meantime, the controller uses passthrough instead of backed caching. In most cases that is not tolerable, since some servers carry high loads, and things can become a bottleneck when the backed cache suddenly shuts off.

Were the throughput tests done in RAID 5 mode?

Unfortunately, all I gained from this article was that you are happy Adaptec has RAID controllers. If those charts included a performance comparison to a 9271 or even a 9260, I’d have some idea how LSI benchmarks compare to the new Adaptec. Just because it is 12G or PCIe 3.0 doesn’t mean it is faster, just that it has access to more bandwidth; the processor may be so slow that it gains nothing.
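The commenter's point, that more link bandwidth does not help if something else is slower, amounts to a bottleneck model: delivered throughput is the minimum over every stage in the path. Here is a minimal sketch under stated assumptions. We assume an x8 PCIe 3.0 host slot (8 GT/s per lane, 128b/130b encoding). The controller limits in the example are hypothetical placeholders, not measured values for any Adaptec or LSI card.

```python
def pcie3_mb_per_s(lanes: int) -> float:
    """Usable PCIe 3.0 bandwidth per direction in MB/s:
    8 GT/s per lane with 128b/130b encoding, other overhead ignored."""
    return lanes * 8e9 * (128 / 130) / 8 / 1e6


def effective_mb_per_s(drive_sum: float, host_link: float,
                       controller_limit: float) -> float:
    """Delivered throughput is capped by the slowest stage in the path."""
    return min(drive_sum, host_link, controller_limit)


if __name__ == "__main__":
    host = pcie3_mb_per_s(8)  # assumption: an x8 slot
    drives = 8 * 1200.0       # eight 12Gbps lanes at their 8b/10b ceiling
    # Hypothetical controller processing limits, for illustration only.
    for controller in (4000.0, 9000.0):
        print(controller, round(effective_mb_per_s(drives, host, controller)))
```

A slow controller caps the result no matter how fast the links are, and a fast controller shifts the cap to the host link. Benchmarks like the ones in the review are what reveal which stage actually binds.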

Dear all, is there a host bus adapter (HBA) that is compatible with the Ubuntu operating system?

Fantastic article! I am running a small post-production shop. Our network runs on 10GbE, and as I add editors, our current RAID (a Promise R8 connected via Thunderbolt to an iMac server) is starting to become a bottleneck.

I’m currently considering building a Windows 2012 R2 server with one of these Adaptec cards to run either an 8-drive or 16-drive RAID 5. Obviously we cannot afford the SSDs tested here, so I am wondering whether it would be possible to run an SSD RAID based on consumer SSDs. Top-of-the-line SSDs from companies such as SanDisk currently achieve 400MB/s with 1TB capacity.

So an 8-bay RAID 5 configuration would give us 7TB of space and run at 2.8GB/s. A 16-bay RAID 5 would give us 15TB of space and a throughput of 6.0GB/s. Are my assumptions here correct?
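The arithmetic in the question follows the usual RAID 5 rule of thumb: one drive's worth of space holds parity, so usable capacity and idealized large sequential read throughput both scale with (n - 1) drives. This sketch reproduces those numbers under that idealization only. It assumes perfect linear scaling and ignores parity overhead on writes, controller and host-link limits, and how consumer SSDs behave under sustained load.

```python
def raid5_capacity_tb(drives: int, drive_tb: float) -> float:
    """Usable RAID 5 capacity: one drive's worth of space goes to parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (drives - 1) * drive_tb


def raid5_streaming_read_mb_per_s(drives: int, drive_mb_per_s: float) -> float:
    """Idealized large sequential read: parity blocks are skipped, leaving
    roughly (n - 1) drives' worth of data bandwidth with linear scaling."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (drives - 1) * drive_mb_per_s


if __name__ == "__main__":
    for n in (8, 16):
        print(n, raid5_capacity_tb(n, 1.0),
              raid5_streaming_read_mb_per_s(n, 400.0))
```

These figures are upper bounds for streaming reads. Writes in RAID 5 also pay for parity computation, so real arrays usually land below them.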

Would it even be possible to use consumer-grade SSDs in a server configuration, or would they just die after three months of use?

Would love to get your thoughts!