MEASURING PERFORMANCE

As with all of our tests, the following tests were performed after a secure erase of the drives. The drives were also conditioned with a predefined workload until they reached steady state. We also tested across the entire span of the drives.

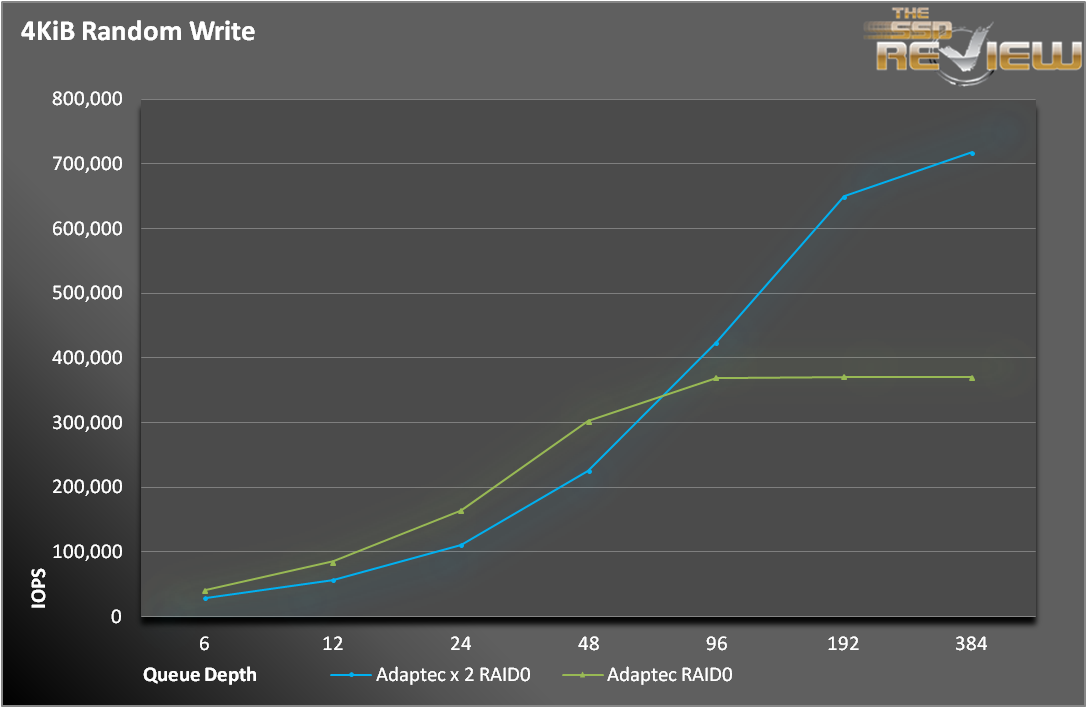

Random 4KiB performance on the ASR-72405 is great. We consistently hit 370,000 IOPS for both reads and writes. With two ASR-72405s, we had no problem clearing 700,000 IOPS. Interestingly, at queue depths below 96 there wasn’t a dramatic difference between one and two ASR-72405s. In fact, for 4KiB random writes, the single ASR-72405 actually bested the dual-card setup.
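To put the random IOPS figures in bandwidth terms, throughput is simply IOPS multiplied by the transfer size. The short sketch below does that arithmetic for the two IOPS figures quoted above, assuming a 4KiB (4096-byte) transfer and reporting decimal MB/s (10^6 bytes). It is illustrative arithmetic only, not an additional measurement.

```python
# Convert random-I/O rates to bandwidth: bytes/s = IOPS * transfer size.
KIB = 1024


def iops_to_mb_per_s(iops: float, block_bytes: int = 4 * KIB) -> float:
    """Return throughput in decimal MB/s (10**6 bytes) for a given IOPS and block size."""
    return iops * block_bytes / 1e6


if __name__ == "__main__":
    # IOPS figures quoted in the text for one and two controllers.
    for label, iops in [("1x ASR-72405", 370_000), ("2x ASR-72405", 700_000)]:
        print(f"{label}: {iops:,} IOPS at 4KiB ~ {iops_to_mb_per_s(iops):,.0f} MB/s")
```

Roughly 1.5GB/s at 370,000 IOPS is well below the sequential figures discussed next, which is why small-block tests are usually judged by IOPS rather than MB/s.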

Sequential performance is where the ASR-72405 really shines. We observed over 6600MB/s in 1MB sequential writes and 6400MB/s in sequential reads. In RAID 5, sequential writes reached 3GB/s, well above Adaptec’s published numbers, while sequential reads matched the RAID 0 performance. The ASR-72405 needs a somewhat higher queue depth for reads to reach maximum speed.
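One reason RAID 5 writes cost more than RAID 0 writes is that the controller must compute and store parity for every stripe it writes. The sketch below shows the basic idea, byte-wise XOR parity over equally sized data chunks and recovery of a lost chunk from the survivors. It is a generic illustration of RAID 5 parity, not Adaptec’s implementation; the chunk contents are hypothetical.

```python
from functools import reduce


def xor_parity(chunks: list[bytes]) -> bytes:
    """Compute RAID 5-style parity: byte-wise XOR of equally sized data chunks."""
    if not chunks or len({len(c) for c in chunks}) != 1:
        raise ValueError("need one or more chunks of equal length")
    # zip(*chunks) yields one tuple of ints per byte position across all chunks.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def rebuild(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover one missing chunk: XOR of the parity with all surviving chunks."""
    return xor_parity(surviving + [parity])


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]  # hypothetical data chunks
    p = xor_parity(stripe)
    # Losing the middle chunk: the parity plus the other two chunks restore it.
    assert rebuild([stripe[0], stripe[2]], p) == stripe[1]
    print(p.hex())
```

Reads need no parity math when all drives are healthy, which fits the observation that RAID 5 sequential reads kept pace with RAID 0.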

So what happened when we used two ASR-72405s? Why, we doubled our performance, of course. We hit 12GB/s for sequential writes and nearly 11GB/s for sequential reads. That is an amazing result: it means that, across the two ASR-72405s, each SSD was writing at its maximum speed. To hit those numbers we had to throw an extremely large number of outstanding IOs at the ASR-72405s, but that is more typical of enterprise installations.
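How close "doubled" comes to perfect linear scaling can be checked with a simple ratio: the dual-card result divided by twice the single-card result. The sketch below applies it to the sequential figures quoted above, approximating "nearly 11GB/s" as 11,000MB/s; that approximation is an assumption, so the read figure is only indicative.

```python
def scaling_efficiency(single: float, multi: float, n: int) -> float:
    """Fraction of ideal linear scaling achieved: multi / (n * single)."""
    return multi / (n * single)


if __name__ == "__main__":
    # Figures quoted in the text, in MB/s; "nearly 11GB/s" approximated as 11,000.
    writes = scaling_efficiency(6_600, 12_000, 2)
    reads = scaling_efficiency(6_400, 11_000, 2)
    print(f"sequential writes: {writes:.0%} of linear, reads: {reads:.0%}")
```

By this measure the second controller delivered roughly 91% of ideal scaling for writes and somewhat less for reads.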

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

In many published reports, a single Optimus cannot deliver latency within that tight a range. These are obviously system cache results.

All caching was disabled, except for any write coalescing that the ROC (RAID-on-Chip) was doing behind the scenes. Remember that the SSDs were not the bottleneck in the latency measurements; in fact, they were running at only 40% of their specified rates. Also, every test we have performed, and those of other sites as well, shows the Optimus to be a very stable SSD. So, to your point, there is some caching happening outside of the DRAM, but it is very limited.
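Latency, queue depth and IOPS are tied together by Little’s law: average outstanding I/Os equal throughput multiplied by average latency. The sketch below applies it in both directions. The pairing of 370,000 IOPS with a queue depth of 96, and the 100µs latency, are hypothetical inputs chosen for illustration, not results from this review.

```python
def mean_latency_us(queue_depth: int, iops: float) -> float:
    """Little's law: average latency = outstanding I/Os / throughput, in microseconds."""
    return queue_depth / iops * 1e6


def required_queue_depth(iops: float, latency_us: float) -> float:
    """Little's law rearranged: outstanding I/Os needed to sustain iops at latency_us."""
    return iops * latency_us / 1e6


if __name__ == "__main__":
    # Hypothetical pairing: 370,000 IOPS sustained at a queue depth of 96.
    print(f"{mean_latency_us(96, 370_000):.1f} us average latency")
    # Hypothetical target: 370,000 IOPS at 100 us average latency.
    print(f"{required_queue_depth(370_000, 100):.0f} outstanding I/Os needed")
```

The same relationship explains why very high throughput needs many outstanding IOs: if per-IO latency stays roughly fixed, the only way to push more IOPS through is to keep more of them in flight.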

Any chance of reviewing the 71605Q to see how it stands up with 1 or 2TB worth of SSD cache and a much larger spinning array? Since it comes with ZMCP (Adaptec’s version of a BBU), you could even try it with write caching on.

I’d especially love to see maxCache 3.0 go head-to-head with LSI CacheCade Pro 2.0.

Considering I already own the 71605Q, and bought it practically “sight unseen” since there are still no reviews available, it is nice to see that the other cards in the line are living up to their claims.

Like I said in the review, I wish we had the time and resources to test every combination, but we can’t get to them all. I have both the 8- and 24-port versions and, yes, they always hit or exceed their published specifications. I agree, that would be a great head-to-head matchup. We have a lot of great RAID stories coming up; maybe we can fit it in. Thanks for the feedback!

Yeah, after I posted that I started brainstorming all the valid combinations you could test with those two cards, and there are quite a few permutations… It also might not be entirely fair to the older LSI solution, but it’s what they have available. I don’t know of any release schedule for CacheCade 3.0 or next-gen cards, so it might not hurt to wait for those.

I guess the best case to test would be best-case cache with worst-case spinners, so RAID-10/1E SSDs with RAID-6 HDDs. See how the two solutions do at overcoming some of the RAID-6 drawbacks, especially the write penalty.
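The write penalty mentioned here is usually estimated with a rule of thumb: each small random write costs 1 back-end I/O on RAID 0, 2 on mirrored levels, 4 on RAID 5 (read data, read parity, write data, write parity) and 6 on RAID 6. The sketch below applies that rule; the drive count and per-drive IOPS are hypothetical, and real controllers with write-back caching or full-stripe coalescing can do considerably better.

```python
# Rule-of-thumb back-end I/Os per small random front-end write.
WRITE_PENALTY = {"RAID0": 1, "RAID1": 2, "RAID1E": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def effective_random_write_iops(drives: int, iops_per_drive: float, level: str) -> float:
    """Aggregate drive IOPS divided by the level's write penalty (ignores caching)."""
    return drives * iops_per_drive / WRITE_PENALTY[level]


if __name__ == "__main__":
    # Hypothetical spinning array: 8 HDDs at 150 random IOPS each.
    for level in ("RAID10", "RAID6"):
        print(level, effective_random_write_iops(8, 150, level))
```

On the same hypothetical drives, RAID 6 delivers a third of the random write IOPS of RAID 10, which is the gap an SSD cache tier would be trying to hide.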

I’m guessing the results would be fairly similar to the LSI Nytro review, but it would still be interesting to see how up to 2TB of SMART Optimus would do with a 20TB array.

Nice! How did you manage to connect the two cards (X2)?

“..We were able to procure a second ASR-72405 and split the drives evenly across the two…”

See my comment

I’m assuming they made a stripe on each RAID card and then did a software RAID 0 stripe of the two arrays into one volume. That would explain why the processors showed 50% until under load.
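If the setup was as this comment guesses (the review text here does not confirm it), the host’s software RAID 0 layer would alternate fixed-size stripes between the two controller volumes. The sketch below shows that address mapping conceptually; the 128-block stripe size and the function name are hypothetical, and real software RAID implementations add details such as metadata offsets.

```python
def raid0_map(lba: int, stripe_blocks: int, members: int) -> tuple[int, int]:
    """Map a logical block to (member index, block offset on that member) for RAID 0."""
    stripe_index, within = divmod(lba, stripe_blocks)
    member = stripe_index % members          # stripes rotate across members
    member_stripe = stripe_index // members  # how many stripes precede it on that member
    return member, member_stripe * stripe_blocks + within


if __name__ == "__main__":
    # Hypothetical: two controller volumes, 128-block stripes.
    for lba in (0, 127, 128, 300):
        print(lba, raid0_map(lba, stripe_blocks=128, members=2))
```

Because consecutive stripes land on alternate controllers, large sequential transfers keep both cards busy, while small I/Os at low queue depth touch only one card at a time.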

Great review, though that SSD has me worried about sustained enterprise usage.