CONCLUSION

At the end of the day, there is one very important point to make. While the LSI 9265-8i is the fastest RAID card on the planet, it simply cannot do these drives justice. If the Patriots could scale perfectly in RAID, their throughput, in both MB/s and IOPS, would be phenomenal: 4.440 GB/s would be the highest attainable throughput!

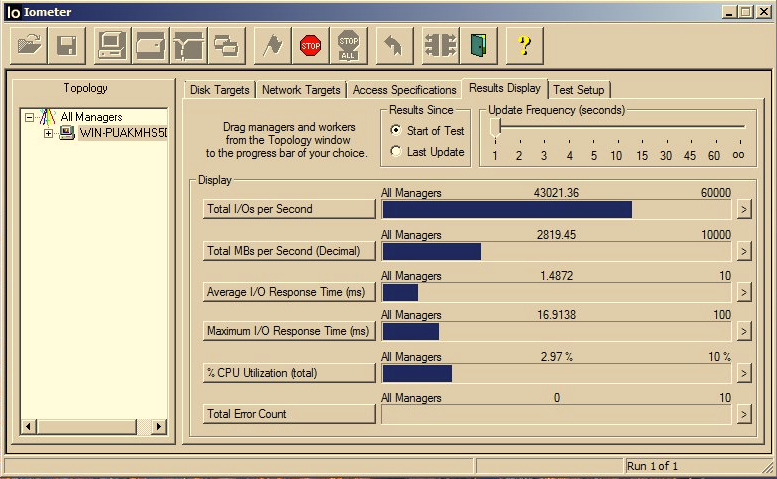

As seen in this Iometer result testing maximum speed (128KB @ QD 128), we attained the highest speed of any drives we have tested on the 9265, a whopping 2.8 GB/s! This is a limitation of the RAID card, not the drives.
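The gap between the ideal and measured figures can be expressed as a scaling efficiency. The sketch below makes one assumption: the 4.440 GB/s ceiling is eight drives at 555 MB/s each. That per-drive figure is inferred from 4,440 / 8 and is not quoted in this review. The measured value is the 2.8 GB/s Iometer result above.

```python
# Scaling efficiency of an 8-drive RAID 0 array: measured vs. ideal linear scaling.
# Assumption: the 4.440 GB/s ceiling is 8 drives x 555 MB/s each (inferred from 4440 / 8).

def ideal_throughput_mb_s(per_drive_mb_s: float, drives: int) -> float:
    """Throughput if every member drive added its full bandwidth (perfect scaling)."""
    return per_drive_mb_s * drives


def scaling_efficiency(measured_mb_s: float, ideal_mb_s: float) -> float:
    """Fraction of the ideal aggregate bandwidth actually delivered."""
    return measured_mb_s / ideal_mb_s


ideal = ideal_throughput_mb_s(555.0, 8)   # 4440 MB/s
measured = 2800.0                          # 2.8 GB/s, Iometer 128KB @ QD 128
eff = scaling_efficiency(measured, ideal)
print(f"ideal {ideal:.0f} MB/s, measured {measured:.0f} MB/s, efficiency {eff:.1%}")
```

At roughly 63 percent of linear scaling, this fits the point above that the card, not the drives, sets the limit.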

Bear in mind that there is still plenty of speed in the Wildfires that simply cannot be utilized yet, because the LSI card we used cannot go that fast. Right now there is no controller that can handle the sheer power of the Patriot Wildfires in this array. As next-generation RAID controllers capable of handling the throughput of these types of drives start to appear, we hope to be there on the cutting edge to witness the unprecedented levels of performance they will bring!

We would like to thank Patriot Memory for the use of their WildFire SSDs in this review and in the Areca Expander review. We went out looking for the absolute fastest drives for our Test Bench, and Patriot delivered. One of the most amazing qualities of the Patriots is the phenomenal latency we experienced. You simply cannot trump excellent latency, regardless of the metric you use for comparison.

Bandwidth is great, but latency is forever!

The SandForce controller combined with the Wildfire firmware gave us some truly superb results. A big problem that many reviewers and testers run into with SandForce-controlled drives is that it is difficult to show true performance in benchmarks, because SandForce performance varies with the compressibility of the data. Sometimes the results just aren’t indicative of that BAM! acceleration you feel when you actually use the device. There is no method to truly measure the ‘snappiness’ a user will feel when using the drive, other than looking at the true latency of the device itself.

The excellent results point to a truly astounding base latency for these devices, and that isn’t always clear when testing with onboard solutions, which add a small amount of overhead that masks the true base latency of any SSD. The key takeaway is that base latency is a measurement of the true capability of the device.

A great show from Patriot, as this array was able to best every other storage solution we have tested to date!


The SSD Review The Worlds Dedicated SSD Education and Review Resource |


Thanks for the awesome review!

I love playing these “what-if” dream scenarios in my head . . .

If money were no object and you could actually afford to spend ~ $3,200.00 for 960GB of SATA III SSD awesomeness that would be second to nothing else out there (for now) . . . which one would it be? Hmm . . . decisions, decisions . . . what would you do?

$3,141.89 for the LSI 9265-8i + eight (8) Patriot Wildfire 120GB SATA III’s?

https://imageshack.us/photo/my-images/827/lsi8patriotssds.jpg/

OR

$3,299.99 for the OCZ RevoDrive 3 X2 960GB PCIe card?

https://imageshack.us/photo/my-images/6/oczrevodrive3x2960gb.jpg/

Based on the reviews, it seems that you’re going to get better overall bandwidth and better read/write IOPS with the LSI + Patriot solution, yes? And for ~ $100 less?
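The price comparison can be checked directly. This sketch uses only the two prices and the 960GB capacity quoted in the comment. It works in advertised capacity and ignores formatted capacity.

```python
# Compare the two ~960GB options quoted above on total price and price per GB.
CAPACITY_GB = 960  # 8 x 120GB Wildfires, or one 960GB RevoDrive 3 X2

options = {
    "LSI 9265-8i + 8x Patriot Wildfire 120GB": 3141.89,
    "OCZ RevoDrive 3 X2 960GB": 3299.99,
}

for name, price in options.items():
    print(f"{name}: ${price:,.2f} total, ${price / CAPACITY_GB:.2f}/GB")

savings = options["OCZ RevoDrive 3 X2 960GB"] - options["LSI 9265-8i + 8x Patriot Wildfire 120GB"]
print(f"LSI + Patriot saves ${savings:.2f}")
```

By these two list prices, the gap is about $158 rather than ~$100. That works out to roughly $3.27/GB versus $3.44/GB.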

However, that OCZ RevoDrive gives you a rated MTBF of 1,000,000 hours (that’s a lifetime!), while the Patriots are (for whatever reason) not rated in this regard, not even on the Patriot Memory website. You just get a standard 3-year product warranty. Is that a possible issue?

Well, the form factor of the Revo makes it attractive. However, it isn’t upgradeable over the long run. The 9265 solution would enable you to switch to faster, more powerful SSDs in the ‘future’. The Wildfires will be plenty durable. MTBF isn’t really a number you can hang your hat on when it comes to SSDs, imo. It reflects the failure of the actual parts, not the longevity of the NAND onboard. I believe that is why Patriot isn’t listing MTBF; it is somewhat of a misnomer when it comes to SSDs.
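The point that MTBF is not a lifetime can be made concrete. MTBF is a population statistic. Under a constant-failure-rate (exponential) model, which is an assumption of this sketch and not something either vendor states, it converts to an annualized failure rate (AFR). It says nothing about NAND wear-out.

```python
import math

HOURS_PER_YEAR = 8766  # 365.25 days x 24 hours


def mtbf_years(mtbf_hours: float) -> float:
    """MTBF expressed in years (NOT an expected service life)."""
    return mtbf_hours / HOURS_PER_YEAR


def annualized_failure_rate(mtbf_hours: float) -> float:
    """AFR under an assumed constant (exponential) failure rate: 1 - exp(-t / MTBF)."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


mtbf = 1_000_000  # RevoDrive 3 X2 rating quoted above
print(f"{mtbf_years(mtbf):.0f} years of 'MTBF', but AFR = {annualized_failure_rate(mtbf):.2%}")
```

Read this way, a 1,000,000-hour rating means roughly 0.87 percent of drives failing per year during their service life. It does not mean a drive lasting 114 years.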

I wouldn’t hesitate to use either solution, but I would prefer the 9265 as it gives you greater flexibility: with expanders or JBODs you could hook up as many as 128 devices, and that could include HDD arrays, etc. You can also use parity RAID sets (RAID 5, 6, etc.), as well as CacheCade and other add-ons. The 9265 is more of a well-rounded solution, imo.

Yeah, I tend to agree with that, and the MTBF rating is really a moot issue with SSDs. Now comes the really hard part . . . the $3,200 price tag! YIKES. That’s a Yugo.

Is the lack of TRIM in RAID an issue?

That is a loaded question, but the short answer is the difference is minute in actual application.

All SSDs perform differently depending on how full they are, and running without TRIM in effect makes the drive appear to be at a higher fill level at the device level.

However, you have to take into account that the difference in performance between a drive that is 100 percent full and 50 percent full is a small percentage (10-15 percent max).

Since no one uses a ‘blank’ SSD, you have to compare performance at a realistic fill level with the performance you could expect from each RAID member drive.

And these worst-case scenarios are short in duration, lasting only until the SSD does GC (garbage collection). Really, the drive can never be 100 percent full, as there is dedicated over-provisioning built into the hardware (intentional spare area for the SSD’s own use).

So you can also add extra over-provisioning to combat any ill effects of not using TRIM.
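Over-provisioning is usually quoted as spare area relative to user capacity. This sketch assumes 128 GiB of raw NAND behind a 120GB drive, a common configuration for 120GB SandForce drives of this era that is not stated in this review. The 12GB of extra unallocated space is a hypothetical choice. Extra OP only helps if that space has never been written, for example after a secure erase.

```python
def over_provisioning_pct(raw_bytes: int, usable_bytes: int) -> float:
    """Spare area as a percentage of the capacity the host can use."""
    return (raw_bytes - usable_bytes) / usable_bytes * 100


GIB = 2**30
GB = 10**9

raw = 128 * GIB        # assumption: 128 GiB of NAND behind a 120GB drive
factory = 120 * GB     # advertised user capacity
print(f"factory OP: {over_provisioning_pct(raw, factory):.1f}%")

# Hypothetical extra OP: leave 12GB of each member drive unallocated.
print(f"with 12GB left unallocated: {over_provisioning_pct(raw, factory - 12 * GB):.1f}%")
```

Under these assumptions, giving up 10 percent of user capacity takes spare area from about 14.5 percent to about 27.3 percent.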

guess that turned into a bit of a long answer 🙂

So how fast would Windows boot with this setup? I didn’t see you test that… I doubt it’s testable, but either way I’d love to know.

Like 1 second?

SITE RESPONSE: Instant-on isn’t a reality just yet. Still, I have had Windows boot in as little as 8 seconds, from the push of the button until the screen is ready to go. On average, though, my system starts in around 14 seconds from the press of the button.

Boot time for an array of this nature will actually be a bit longer, as you wait for the controller itself to boot. The controller has a BIOS of its own, which takes time to boot. This is a necessary evil, however, as it allows the card to work ‘independently’ of the OS or host machine. (RAID card initialization takes about 10 seconds.)

After the card BIOS boots, boot times are usually in the 9 to 10 second range for this setup. The underlying issue keeping us from a 1 second boot time, which this setup is more than capable of, is the coding of the operating system. NTFS is the file system used by Windows, and it is unfortunately built primarily around optimizing for spinning disks, not flash media. The Windows operating system itself is also not optimized for SSDs and their extremely low latency. It often issues single-threaded I/O requests and inserts unexplained wait times where the storage system actually sits idle during loading. Many times this is simply poor coding, but there are also buffers and cache layers involved that muddy the water quite a bit.
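Why single-threaded I/O leaves fast storage idle can be illustrated with Little's Law, a general queueing result swapped in here as an illustration. At steady state, throughput equals requests in flight divided by latency. The 100 microsecond latency below is hypothetical. The sketch also ignores the point where the device or controller saturates, so the higher-QD figures are upper bounds, not predictions.

```python
def iops_from_littles_law(outstanding_ios: int, latency_s: float) -> float:
    """Little's Law: throughput = concurrency / latency (steady state)."""
    return outstanding_ios / latency_s


BLOCK = 4096          # 4KB requests
LATENCY = 100e-6      # hypothetical 100 microseconds per request

for qd in (1, 4, 32):
    iops = iops_from_littles_law(qd, LATENCY)
    print(f"QD{qd}: {iops:,.0f} IOPS, {iops * BLOCK / 1e6:.1f} MB/s")
```

With only one request in flight, throughput is capped at 1 / latency no matter how many drives sit in the array. This is why the loading patterns described above leave the storage underused.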

What is needed is either another file system or, more likely, an operating system coded to truly optimize for SSD (or uber RAID) throughput and latency. For instance, some game developers are coding an “SSD detection” algorithm into their games. When an SSD is detected, the game requests data from it in a much different manner, multithreaded and relying far less on buffers and filler textures than games currently do. The result will be games and levels that load in roughly a second, versus the current standard of over a minute for a level to load!

4KB writes don’t improve much in RAID-0 compared with the single Wildfire 120GB performance review: 30.85/72.82 MB/s of 4KB read/write in RAID-0 versus 16.80/58.05 MB/s of 4KB read/write for a single Wildfire 120GB (AS SSD results). Other sites show the Vertex 3 120GB at 20.00/72.41 MB/s of 4KB read/write in AS SSD, close to the RAID performance.
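At QD1 only one request is in flight, so a 4K throughput figure can be turned back into an average per-request latency. This sketch uses the AS SSD 4K read numbers quoted above. It assumes AS SSD’s MB means 10^6 bytes; if it means 2^20 bytes, the latencies come out about 5 percent lower.

```python
def qd1_latency_us(mb_per_s: float, block_bytes: int = 4096, mb: int = 10**6) -> float:
    """At QD1 one request is in flight, so average latency = 1 / IOPS (in microseconds)."""
    iops = mb_per_s * mb / block_bytes
    return 1e6 / iops


# AS SSD 4K read results quoted above (assumption: MB = 10**6 bytes)
for label, mbps in [("RAID-0 (8x Wildfire)", 30.85), ("single Wildfire", 16.80)]:
    print(f"{label}: ~{qd1_latency_us(mbps):.0f} us per 4K read")
```

Roughly 133 microseconds per read in the array versus about 244 microseconds for the single drive suggests the 4K QD1 read gain is a per-request latency difference, not extra parallelism.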

Anyway, this review will be useful for server-builders mostly, and entertaining for us.

The thing is, when you are writing to, or reading from, an array of SSDs at 4K QD1, you are only accessing one disk per request. Think of it this way: you are requesting one individual 4K file, repeatedly, and each request is answered by one device only. These results were obtained using write-through, which does not leverage write caching.

If the QD were higher, say 2, 4, or 16, multiple requests would be issued simultaneously and thus answered by a number of disks.
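The one-disk-per-request behaviour follows from how RAID 0 lays data out. The sketch below uses the generic round-robin striping layout, not LSI’s documented on-disk format. The 64KB strip size and the request offsets are assumptions for illustration.

```python
STRIPE = 64 * 1024   # assumption: 64KB strip per member drive
DRIVES = 8


def members_for_request(offset: int, length: int,
                        stripe: int = STRIPE, drives: int = DRIVES) -> set[int]:
    """Return the RAID 0 member indices holding any byte of [offset, offset + length)."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    return {chunk % drives for chunk in range(first, last + 1)}


# QD1: a single aligned 4K request never crosses a 64KB strip, so one drive answers it.
print(members_for_request(0, 4096))            # {0}

# A 128KB request spans two strips, so two drives share the work.
print(members_for_request(0, 128 * 1024))      # {0, 1}

# QD8: eight outstanding 4K requests in different strips can keep all eight drives busy.
batch = [i * STRIPE + 8192 for i in range(8)]
print(len(set().union(*(members_for_request(o, 4096) for o in batch))))  # 8
```

Real random workloads will not spread this evenly, since several outstanding requests can land on the same member. The principle still holds: only concurrent requests can engage more than one drive.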

The best that any storage solution, be it onboard or hardware RAID, can do is simply not add latency to a QD1 4K request. All connection methods have their overhead, be it SATA, IDE, etc. The 9265 has very low latency that is not hampered by as much overhead, as it is shooting across the PCIe bus, and it has finely tuned firmware. Most of your loss of performance at 4K QD1 is merely firmware and bus overhead. The 9265 is a superb device here, and the fact that the results we see at QD1 are so high is very telling. This is a huge part of the reasoning behind the conclusion remarks about latency: the better the latency of the host, the better the underlying device itself can respond.
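The claim that host overhead decides QD1 results can be put as a simple additive model. Each QD1 request pays the SSD’s own latency plus whatever the host path adds. Both latency values below are hypothetical, chosen only to show the trend.

```python
def qd1_iops(device_latency_us: float, host_overhead_us: float) -> float:
    """At QD1 each request pays device latency plus host/bus/firmware overhead."""
    return 1e6 / (device_latency_us + host_overhead_us)


def overhead_share(device_latency_us: float, host_overhead_us: float) -> float:
    """Fraction of each QD1 request's time spent outside the SSD itself."""
    return host_overhead_us / (device_latency_us + host_overhead_us)


DEVICE_US = 80.0  # hypothetical SSD base latency
for overhead in (10.0, 40.0, 80.0):  # hypothetical host paths, low to high overhead
    print(f"overhead {overhead:.0f} us: {qd1_iops(DEVICE_US, overhead):,.0f} IOPS, "
          f"{overhead_share(DEVICE_US, overhead):.0%} of time outside the SSD")
```

The faster the SSD itself, the larger the share of each request that the host path eats. This is why a low-overhead host exposes more of a drive’s base latency.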

This is a fun review, but even casual users have much to gain from it. It can be informative about the gains to be had from different types of solutions. I know many guys with 4-6 and sometimes 8 SSDs on a host of different RAID cards, and they are constantly looking for that next level of performance. This is especially true in video editing and photography, and tbh many casual users are getting there, given the abundance of HD video for us ‘normal’ people these days!

Also, the next SATA spec will include a direct connection to the PCIe bus for SSD/HDD devices. That is TRULY exciting for this storage nerd, and it will allow all of us to see the true base latency of the devices on the market. That is where testing like this will be great for future reference as well.

Look for that coming to a motherboard near you by this time next year!

Hi Paul

Quite a broad answer from you.

This next SATA spec is known on the web as SATA Express. If I understood you correctly, connecting eight of these Wildfires straight to the PCIe bus would presumably have shown better performance, because a PCIe connection adds less overhead than a SATA3 controller.

What is known is that SATA Express allows for 8 and 16Gbps bandwidth. Based on this, we would definitely see higher sequential reads/writes.
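The headline Gbps figures include line-encoding overhead, so usable bandwidth is lower. This sketch assumes the commonly described mapping of SATA Express 8Gbps to two PCIe 2.0 lanes (8b/10b encoding) and 16Gbps to two PCIe 3.0 lanes (128b/130b encoding). It ignores packet and protocol overhead, so real-world figures will be lower still.

```python
def usable_mb_s(line_rate_gbps: float, lanes: int, payload_bits: int, total_bits: int) -> float:
    """Per-lane line rate x lanes, minus encoding overhead, in MB/s (10**6 bytes)."""
    return line_rate_gbps * 1e9 * lanes * payload_bits / total_bits / 8 / 1e6


links = {
    "SATA 6Gb/s (8b/10b)": usable_mb_s(6.0, 1, 8, 10),
    "SATA Express 8Gb/s = 2x PCIe 2.0 (8b/10b)": usable_mb_s(5.0, 2, 8, 10),
    "SATA Express 16Gb/s = 2x PCIe 3.0 (128b/130b)": usable_mb_s(8.0, 2, 128, 130),
}
for name, mb_s in links.items():
    print(f"{name}: ~{mb_s:,.0f} MB/s")
```

That is about 600 MB/s per SATA 6Gb/s port, against roughly 1,000 and 1,970 MB/s for the two SATA Express rates. This is why higher sequential figures per device are expected.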

As for me, I intend to finally replace my bulky PC with a notebook. That is why I will be looking for the next AMD APUs on the market.

All we can do is hold out until SATA Express motherboards are out. I wish advancements in computing technology came faster than they do… It is no fun waiting years to witness the next CPU and so on.

Thank you. Great information. This rig you set up is excellent. I would love to run a RAID 10 SSD setup. I would love it even more if I could dream up a dual-controller setup so I could have redundant drives and redundant controllers/HBAs. In the enterprise world I set up Linux MPP multipathing to dual controllers. If a disk fails, RAID 10 has my back; if a controller fails, MPP has my back.

Nice article! Actually, after spending so much on the hardware, you could invest another $200 in the optional LSI FastPath(tm) feature key. Then, and only then, will the LSI HBA show its full potential with SSDs. Check this out: https://www.lsi.com/downloads/Public/RAID%20Controllers/RAID%20Controllers%20Common%20Files/MR-SAS_9265-9285_Performance_Brief_022511.pdf