UNDERSTANDING THE ‘TIERED’ APPROACH

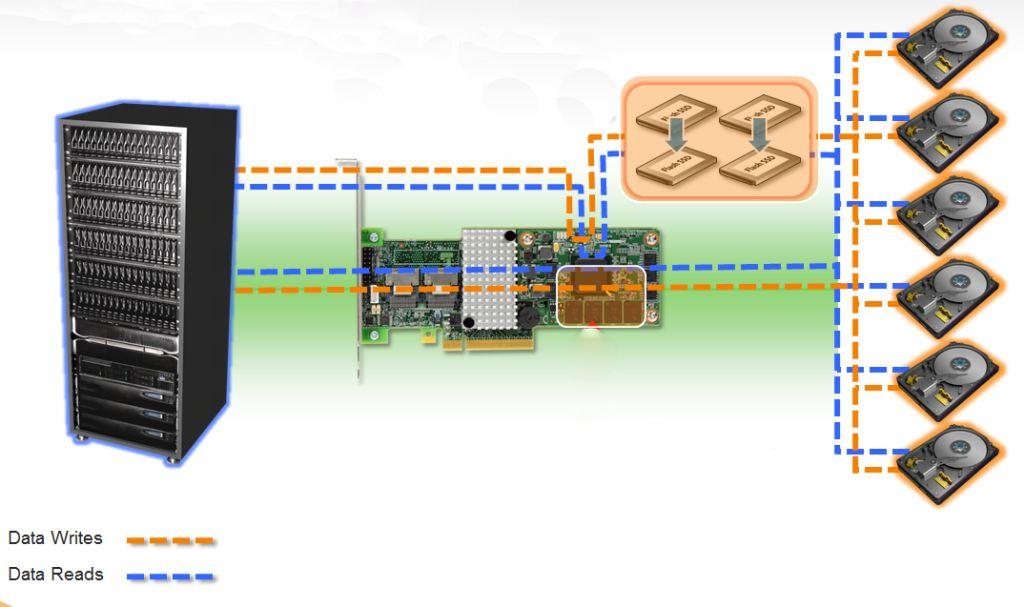

The CacheCade Pro system organizes storage into three distinct layers. Commands are issued from the host system, which in this illustration is on the left. From there the data travels to the RAID controller and, in a conventional system, directly to the HDD array.

With this system in place, a separate array composed of SSDs is also connected to the controller. This layer is the “SSD Caching Virtual Drive” (SCVD), shown in the graphic as the devices in the orange shaded area above the controller. To the right of them is the Base Volume of HDDs. New in this release, the base volume can use any configuration the user wishes; no specific RAID sets or settings have to be used in conjunction with the software.

Also shown in orange is the DRAM on board the controller itself. That makes three layers: DRAM, SSD and HDD, listed from fastest (and thus most desirable) to slowest. The idea is to use all three layers to the maximum benefit of the host system when serving I/O requests. The DRAM and SSD caches work independently, yet collectively, to optimize performance and reduce operations on the base HDD volume.
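The lookup order described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not LSI's firmware. Each tier is a plain dictionary keyed by block number, and the class name `TieredStore` is hypothetical. Copying a block into DRAM after an SSD or HDD hit is an assumed staging policy chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TieredStore:
    """Toy three-tier model (hypothetical): DRAM -> SSD (SCVD) -> HDD base volume.
    Each tier is a dict mapping block number -> data."""
    dram: dict = field(default_factory=dict)  # controller DRAM cache (fastest)
    ssd: dict = field(default_factory=dict)   # SSD Caching Virtual Drive
    hdd: dict = field(default_factory=dict)   # base HDD volume (slowest, holds everything)

    def read(self, block: int):
        """Return (data, tier_name) from the fastest tier that holds `block`."""
        for name, tier in (("dram", self.dram), ("ssd", self.ssd), ("hdd", self.hdd)):
            if block in tier:
                if name != "dram":
                    # Assumed staging policy: keep a copy in DRAM for the next access.
                    self.dram[block] = tier[block]
                return tier[block], name
        raise KeyError(block)
```

Consider `TieredStore(hdd={1: b"a", 2: b"b"}, ssd={2: b"b"})`. The first `read(2)` is served from the SSD tier and a second `read(2)` comes from DRAM. A `read(1)` falls through to the HDD base volume.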

UNDERSTANDING “HOT” APPLICATION DATA

The key to this technology is knowing what to cache and when. Automation is essential, because monitoring and adjusting the cached data manually would be far too time consuming. LSI has developed a software algorithm that detects which application data is accessed most often. The data isn't treated as individual files but as data hot spots. The most frequently accessed data is tagged as Hot data and copied to the flash layer of storage (SCVD). Moving only the hot application data to the SSDs also helps SSD longevity, because it eliminates unnecessary writes. When that Hot data is accessed, it is read from, or written to, the SSD for better performance.

As the graphic below shows, a typical database application I/O profile concentrates read and write activity in specific regions of the disk volume. These high-use regions are prime candidates for SSD caching.
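Tracking hot spots by region rather than by file can be illustrated with a simple access counter. This is a hypothetical sketch, not LSI's algorithm. The `HotSpotTracker` class, the fixed region size and the fixed access threshold are all assumptions. The sketch also shows why promoting only hot regions limits SSD writes: each region is copied to flash at most once, and a region that never crosses the threshold is never written to the SSD.

```python
from collections import Counter


class HotSpotTracker:
    """Hypothetical sketch: count I/O per fixed-size region and copy a region
    to the SSD cache only once it crosses a heat threshold."""

    def __init__(self, region_size: int = 1 << 20, threshold: int = 3):
        self.region_size = region_size  # assumed region granularity, in bytes
        self.threshold = threshold      # assumed accesses before a region is "hot"
        self.counts = Counter()         # region index -> access count (reads and writes)
        self.on_ssd = set()             # regions already copied to the SCVD

    def record(self, offset: int) -> bool:
        """Record one read or write at byte `offset`.
        Return True only on the access that promotes its region to the SSD."""
        region = offset // self.region_size
        self.counts[region] += 1
        if region not in self.on_ssd and self.counts[region] >= self.threshold:
            # Copy once; cold regions never generate SSD writes at all.
            self.on_ssd.add(region)
            return True
        return False
```

Take `region_size=100` and `threshold=3`. The offsets `10, 20, 550, 30` promote region 0 on the fourth access. The region containing offset 550 stays on the HDD.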

Data remains on the SSD until the algorithm decides it should be retired. Whether cached data is retained or retired is determined by “popularity” statistics that are continuously maintained for all cached read and write data.
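Popularity-based retirement can be sketched as a fixed-capacity cache that ages its scores and evicts the least popular entry when space is needed. The article does not say how the popularity statistics are computed, so this sketch makes several assumptions. The `PopularityCache` class, the per-access score increment and the halving decay factor are illustrative only. Admission (deciding what is hot enough to cache) is assumed to happen elsewhere.

```python
class PopularityCache:
    """Hypothetical sketch: a fixed-capacity SSD cache that tracks a popularity
    score per cached region and retires the least popular one when full."""

    def __init__(self, capacity: int, decay: float = 0.5):
        self.capacity = capacity  # assumed number of regions the SSD can hold
        self.decay = decay        # assumed aging factor applied on each tick()
        self.score = {}           # cached region -> popularity score

    def access(self, region: int) -> None:
        """Count a read or write hit, or admit a region, retiring one if full."""
        if region in self.score:
            self.score[region] += 1.0
            return
        if len(self.score) >= self.capacity:
            victim = min(self.score, key=self.score.get)  # least popular region
            del self.score[victim]                        # retire it from the SSD
        self.score[region] = 1.0

    def tick(self) -> None:
        """Age every score so data that stops being accessed gradually cools off."""
        for region in self.score:
            self.score[region] *= self.decay
```

Decay lets a region that was busy earlier lose its place once newer data becomes more popular, instead of occupying the SSD indefinitely.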

The effectiveness of the caching increases over time as the algorithm more accurately gauges which data should be cached and the cache fills with that data. It doesn't matter which process is requesting the information, so multiple applications accessing one or more base volumes can benefit from the caching simultaneously.
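The warm-up effect and the sharing across volumes can be illustrated with a small simulation. One cache is keyed by `(volume_id, region)`, so requests from any application against any base volume draw on the same pool. The hit ratio is measured over consecutive windows of the trace. The function name `hit_ratios`, the unbounded cache and the admit-on-first-miss policy are simplifying assumptions. The trace is synthetic, made up for the example rather than measured.

```python
def hit_ratios(trace, window):
    """trace: iterable of (volume_id, region) pairs from any mix of applications.
    Returns the hit ratio of each complete window of `window` accesses, using one
    shared cache for all volumes (assumed unbounded, admit on first miss).
    A trailing partial window is dropped."""
    cached = set()
    ratios, hits, seen = [], 0, 0
    for key in trace:
        if key in cached:
            hits += 1
        else:
            cached.add(key)  # populate the cache on a miss
        seen += 1
        if seen == window:
            ratios.append(hits / window)
            hits = seen = 0
    return ratios
```

Try the repeating trace `[(f"vol{i % 2}", i % 6) for i in range(24)]` with `window=4`. The ratios come out as `[0.0, 0.5, 1.0, 1.0, 1.0, 1.0]`. The first window is all misses while the cache fills, and after that every request from either volume hits.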

The SSD Review The Worlds Dedicated SSD Education and Review Resource |