REPORT ANALYSIS AND FINAL THOUGHTS

The HighPoint SSD7101A-1 NVMe RAID Controller provides a very interesting perspective into the world of high performance, single form factor SSD solutions. It is a full-size PCIe 3.0 x16 card that houses four NVMe SSDs, each on its own PCIe 3.0 x4 link, and the drives can be configured in RAID. In our testing, the best performance came with OS-level RAID 0 and the Samsung 960 Pro… which of course would be a given, as the Samsung has higher specifications. Still… is 11GB/s the fastest yet?
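To put the 11GB/s figure in context, here is a minimal sketch of the theoretical PCIe 3.0 ceiling for a single x4 drive link and for the full x16 slot. It assumes the standard PCIe 3.0 signalling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will land below these numbers. The function name is purely illustrative.

```python
# Rough theoretical PCIe 3.0 bandwidth per direction, before protocol overhead.
GT_PER_S_PER_LANE = 8.0          # PCIe 3.0 signalling rate, gigatransfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie3_bandwidth_gbps(lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GB/s for a PCIe 3.0 link."""
    gigabits_per_second = GT_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes
    return gigabits_per_second / 8  # convert Gbit/s to GB/s


if __name__ == "__main__":
    # x4 is the link each SSD gets on the card; x16 is the card's slot.
    for lanes in (4, 16):
        print(f"x{lanes}: {pcie3_bandwidth_gbps(lanes):.2f} GB/s")
```

Under these assumptions each drive link tops out just under 4GB/s and the slot just under 16GB/s, which is why a four-drive stripe in the 10 to 11GB/s range is plausible for this card.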

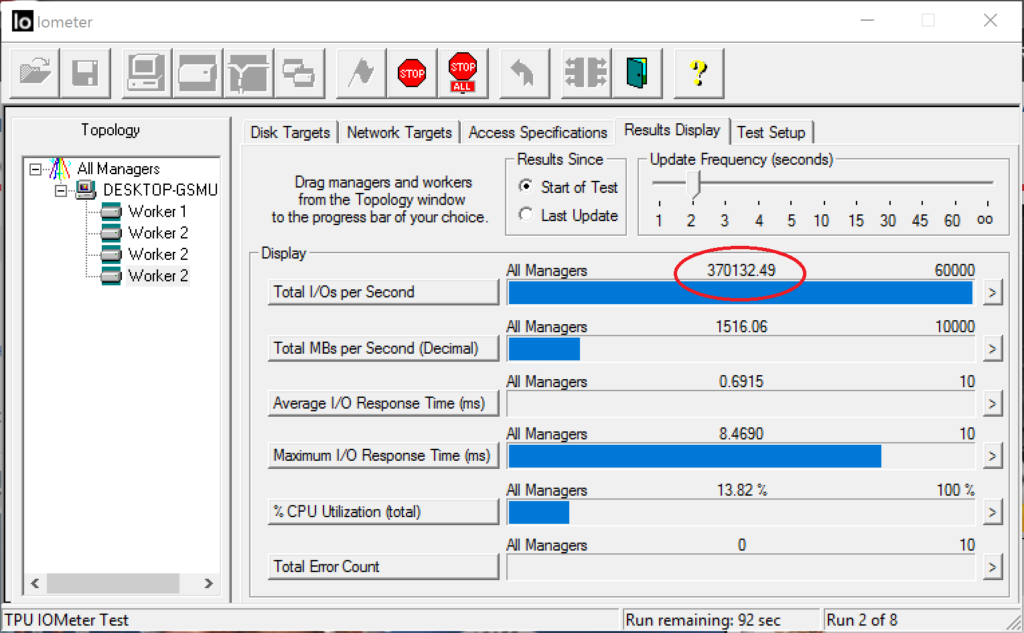

It is a bit of a give and take here with respect to performance. While we did get some amazing performance results in our testing, the test software itself displayed absolutely no consistency between tests, other than that results were consistently high in one form or another. For instance, we received a result of 1 million write IOPS in AS SSD but could not duplicate it anywhere else. In fact, we couldn’t come anywhere near that in Iometer, and this result is more in line with what we expected for this drive:

Conversely, we thought we might have better read IOPS so we ventured into Iometer once again. I used JBOD and threw four workers onto each drive separately and look at what we received:

Now that is 1.5 million IOPS and it is entirely believable, considering how we got there. We have to remember though that this was using the drives separately in Iometer and it brings up a very important thought. It is actually the same with all SSDs. Know what you need the storage device to do and only then seek out the proper solution. Regardless… amazing result that I never expected. This is the SSD7101A-1 being put through the paces in one of our systems:

Next up… pricing and availability. I just checked Amazon and it wasn’t there. I did find it elsewhere for as low as $400, which shocked the heck out of me. That is an amazing price! To think that you can put together a device that would really be key in high end media manipulation for under $1000 is absolutely incredible. It carries a 1-year warranty and you have to wonder what kind of performance you could squeeze out of two of these in RAID 0. The problem, of course, is understanding the PCIe device configuration of your system.

FINAL THOUGHTS

The HighPoint SSD7101A-1 NVMe SSD RAID controller is capable of RAID throughput over and above 10GB/s as well as 1.5 million IOPS. We pulled off that throughput on two separate brands of SSDs, understanding that the only two validated SSDs for this controller right now are the Samsung 960 Pro and Evo. That’s right; the RD400 is not recognized as an approved SSD… but it was just as amazing in its performance. This RAID controller is a monster and something just about every enthusiast should consider. Now, creating a RAID boot device… that we are still working on. I am hoping it was just a glitch in the machine and will be having a chat with HighPoint about that. I truly figured their software would enable that, as the RAID is created on the device via the software. In any case, thanks much to HighPoint, Samsung and Toshiba for their support and… Innovation Award!

Check for the HighPoint SSD7101A-1 at Amazon

Check out pricing on the Samsung 960 Pro M.2 SSD at Amazon.

Check out pricing of the Toshiba RD400 NVMe SSD at Amazon.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

I wonder if a Threadripper build could run this with dual GPUs?

Waiting for it to arrive. I am told it can…and then some.

I heard only intel boards are listed as supported at their site?

Wow, I look forward to your piece on it.

Comparing this w/ the native TR raid drivers coming soon.

I nurse a hope that AMD’s lanes are cleaner than Intel’s. Certainly there are a lot of ifs and buts attached to pre-X299 mobo pcie3 x16 slots.

The normal spec for TR is 3x native m.2 ports on the mobo, so 3x ssd in raid on TR is very democratic – available to most wise TR buyers if u boot on sata ssd – no expensive controller needed.

From raid benches i have seen, 3x raid 0 seems a sweet spot in the diminishing returns table.

No, it won’t. TR is limited to 2x 16 lane cards, sadly.

Hey! Great review. So this unit is supposed to be bootable? Just possible issues with your particular motherboard?

Nice review! Could you also review the native NVMe RAID on Threadripper with the drivers that will be released on the 25th of September? AMD claims that it supports up to 7 NVMe drives in RAID.

I don’t recall the details as they were of no use to me, but I did research bootable raid on the net, and the upshot is u can boot on anything; there is just an arcane procedure to set it up, like a clean install of Windows from a flash stick onto a suitably pre-configured array.

I gather from comments the article hasnt clarified that only intels are listed in the supported moboS, or so I hear.

It seems odd, as its perfect for lane rich TR/epyc, bar the high cost & possibly superfluous raid capabilities (tr & epyc can perform raid unaided by controller)

The usual frustrating refrain re raid nvme is “its already so fast, u wont notice the difference” – true, but your system will, and u will notice that.

To me the exciting concept is virtual memory. For the first time ever, the boundaries between ram and storage are getting blurred by such arrays.

For discerning parts of this virtualising process (as we see here, iops are no better on raid), intelligent 8GB/s paging/juggling of big slabs of contiguous data from such storage arrays up and down the speed hierarchy of the cache pool, could be extremely useful.

As a sign of things to come, we see the radeon vega pro ssg, with 2TB of raid nvme attached to the gpu card, a snip at $7k US. The onboard nvme raid can be used as a cache extender for the gpu’s onboard 16GB of hbm2 ram.

All vega cards can use hbcc to do same cache extending, only via the more handicapped pcie3 bus to system resources like these arrays or spare system memory.

This auto expand and contract of cache at system level is a revolution for coders. They can simply assume ~unlimited gpu cache, and let the system handle the details.

AMD “slides” on hbcc claim a bump in gpu ram of 2-3x can be simulated, un-noticed by users, by adding spare system ram to the hbcc vega gpu cache pool.

My posted thoughts boil down to this: add a small, fast non-boot array to your system, and soon a use will come along & u will be glad u did.

So here is what i figure for a cheap rig to test the water.

Get a TR w/ 3x nvme ports. preserve these precious native raid supported ports for your raid and find another way of booting.

factors are:

the drives are dear. u have been generous with ports/lanes, nand improves fast, u dont need space (multiples of existing ram are nothing vs ssd array capacities), only speed,…

If the progression in specs from the 950 pro to the now oldish 960 pro is any guide, we will soon see nvmeS which saturate their allotted 4GB/s pcie3 bandwidth, in both read and write.

at that point, you can get the most from your 3x array, so its tempting to go cheap til then.

while the samsung 960 pro 500GB ssd is the current king for this, 3x ~$370us? is a lot, and still well shy of the magic 4GB/s at ~3.2 GB/s SeqRead & 2.2GB/s SW.

The samsung evo retail range are good, slower and cheaper, and also come in much cheaper 256GB size, and may stripe to some very respectable speeds, yet keep outlays minimal until the really cool ssdS arrive, at which point, you have 3 x very salable 256GB ssd ~desktop boot drives.

then we come to the matter of oem versions of these same drives, which are available out there even cheaper.

Really cheap? There are even such panasonic 128GB nvme ssdS – dunno, some say they lack sufficient cache, oth, maybe raid 0 has the effect of tripling the apparent cache?

NB I am not suggesting u rely on this storage. I am suggesting it as a scratch workspace for temp files and virtual page files as above. Its like volatile dram, if suddenly lost, the job can be recovered. This is the coding norm for such files. Debate on possible failure rates (~anachronism from hdd days imo) is beside my point.

So yeah, w/ a 3 x nvme port TR w/ 16GB & even a future 4GB VEGA card, the above array could realistically provide ~12GB/s read and write in a year or so, 9 & 5.25 GB/s now w/ 960 pro, & for not much money (compared to large ram certainly). 256GB panasonics should yield 7GB/s & 4.5GB/s.
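For anyone checking those estimates, here is a minimal sketch that compares them with the ideal RAID 0 figure, where every drive contributes its full rated speed. The per-drive speeds (3.2GB/s read, 2.2GB/s write) and the 9 & 5.25GB/s array estimates are the ones quoted in this comment; the function names and the notion of an "implied efficiency" are illustrative assumptions, not measurements.

```python
def ideal_stripe_gbps(per_drive_gbps: float, drives: int) -> float:
    """Ideal RAID 0 throughput: every drive contributes its full speed."""
    return per_drive_gbps * drives


def implied_efficiency(estimate_gbps: float, per_drive_gbps: float, drives: int) -> float:
    """Fraction of the ideal striped throughput that an estimate represents."""
    return estimate_gbps / ideal_stripe_gbps(per_drive_gbps, drives)


if __name__ == "__main__":
    drives = 3
    # (label, per-drive GB/s, array estimate GB/s), all taken from the comment above.
    cases = (("read", 3.2, 9.0), ("write", 2.2, 5.25))
    for label, per_drive, estimate in cases:
        ideal = ideal_stripe_gbps(per_drive, drives)
        eff = implied_efficiency(estimate, per_drive, drives)
        print(f"{label}: ideal {ideal:.2f} GB/s, estimate {estimate:.2f} GB/s, "
              f"implied efficiency {eff:.0%}")
```

The same function gives the ~12GB/s figure for three future drives that each saturate a 4GB/s link.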

(remember sata ssdS are ~500MB/s & HDD is about 120MB/s when they finally find the file)

In perspective, most agree, few games suffer from using 8 lanes vs 16 lanes for demanding gaming, and 8 lanes equates to ~8GB/s, so an 8GB/s array is like system memory bandwidth to a gpu card.

Apart from the drives, it wont cost a nickel extra on your planned TR, maybe even a smaller spend on “precautionary” memory.

There are those who slag off bios raid & some who praise/slag off software raid. Dunno. Its hard to trust anything when so many attitudes seem remnants of another era.

A curious one is some round on windows software raid for the huge? overheads it introduces, which begs the question: does it matter when we now all 🙂 have cores doing nothing?

It puzzles me that there is a huge demand for such cards as these, but they almost dont exist. You can get a pcie3 x4 lane adaptor for a single nvme ssd cheap as chips ($15) anywhere, but ~nothing bigger exists i can find, even lacking raid.

Why is it so simple to make a dumb 1x adaptor, and so hard to make a dumb 2x (8 pcie3 lane) or 4x (16 lane) adaptor? Thats all thats needed for AMDs bios raid or MS software raid.

fyi

comparison results on an x99? intel mobo, 6x 960 pro 1GB in various raid arrays

https://imgur.com/a/a68Sd

There always seem to be some glitches in all of them, but patterns emerge, raw numbers aside.

The table gives a good idea of the sort of multiple in raw speed that can be expected if the ecosystem can handle these radical bandwidths.

one pattern is there seems to be a big drop-off in read gains for the fourth drive in an array – ?, maybe its just me?

another pattern that stands out, is write speeds, as alluded to in the article.

In some apps, fast read may be meaningless if writes are slow, and writes lag seriously on single ssdS.

The numbers show tho, that writes profit far more from striping than read speeds, and it may pay a user to keep adding drives to the array, even when write gains are dropping off.

another curiosity I noticed elsewhere, was they tested the array’s 4x 960 pro drives individually, and the variance was quite extreme – ~2800MB/s vs 3200MB/s read? Its very damaging to add sub par drives to an array, as the lowest speed drive dictates the pace for the whole array.
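The "slowest drive dictates the pace" point can be sketched with a deliberately simplified model: in RAID 0 every drive serves an equal share of each large transfer, so the stripe finishes only when the slowest member does. The 2800 and 3200MB/s values are the ones mentioned in this comment; the mix of three fast drives and one slow one is a hypothetical example, and the model ignores controller and CPU overhead.

```python
def stripe_read_mbps(drive_speeds_mbps: list[float]) -> float:
    """Simplified RAID 0 model: equal shares per drive, paced by the slowest member."""
    return len(drive_speeds_mbps) * min(drive_speeds_mbps)


def lost_to_slow_drive_mbps(drive_speeds_mbps: list[float]) -> float:
    """Throughput left on the table versus simply summing every drive's own speed."""
    return sum(drive_speeds_mbps) - stripe_read_mbps(drive_speeds_mbps)


if __name__ == "__main__":
    matched = [3200.0, 3200.0, 3200.0, 3200.0]
    mixed = [3200.0, 3200.0, 3200.0, 2800.0]  # hypothetical: one sub-par drive
    for name, speeds in (("matched", matched), ("mixed", mixed)):
        print(f"{name}: {stripe_read_mbps(speeds):.0f} MB/s striped, "
              f"{lost_to_slow_drive_mbps(speeds):.0f} MB/s lost to the slowest drive")
```

In this model one slow drive costs more than its own shortfall, because the three faster drives are held back to its pace as well.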

This card is passe now on the TR4 platform as you get FREE RAID from AMD:

Der8auer hits 28GB/s on TR4 in Iometer with 8x NVMe Samsung 960 Pro!

https://www.youtube.com/watch?v=9CoAyjzJWfw

ASUS Hyper M.2 x16 Card (less than 100$)

https://www.komplett.se/product/945185/datorutrustning/lagring/haarddisk/ssd-pci-express/asus-hyper-m2-x16-card

ASUS X399 Rog Zenith

Change the PCIe slot from the original x16 mode into x4/x4/x4/x4 mode.

1900X can push 64 pci-lanes.

Done.
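The lane arithmetic behind that recipe can be sketched as follows. The 64-lane figure is the one quoted in this comment; how many of those lanes actually reach the slots, and how each slot is wired, depends on the board, so treat the budget as an upper bound. The device list and function names are hypothetical.

```python
def lanes_needed(devices: dict[str, int]) -> int:
    """Total PCIe lanes requested by a set of devices."""
    return sum(devices.values())


def fits(devices: dict[str, int], available_lanes: int) -> bool:
    """True if the devices fit the lane budget (ignores per-slot wiring limits)."""
    return lanes_needed(devices) <= available_lanes


def bifurcate(slot_lanes: int, link_width: int = 4) -> int:
    """Number of independent links a slot yields when split into equal widths."""
    if slot_lanes % link_width != 0:
        raise ValueError("slot width must be a multiple of the link width")
    return slot_lanes // link_width


if __name__ == "__main__":
    # Hypothetical build: two GPUs plus one four-drive M.2 carrier card.
    build = {"GPU 1": 16, "GPU 2": 16, "M.2 carrier card": 16}
    budget = 64  # figure quoted in the comment above
    print(f"lanes needed: {lanes_needed(build)} of {budget}, fits: {fits(build, budget)}")
    print(f"x16 slot in x4/x4/x4/x4 mode gives {bifurcate(16)} NVMe links")
```

This only checks lane counts; whether a given board exposes a third full x16 slot, as an earlier comment questions, is a separate matter.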

As someone who dual boots different OS that don’t necessarily support motherboard RAID, that’s really the target market left for the PCIe RAID controllers.

can you use it with just one or two m2 ssd in normal or raid configuration?

Yes you can.