CacheCade can improve small-file performance by up to 50X, which can yield huge gains for certain types of setups. Web servers, file servers, and OLTP (Online Transaction Processing) systems stand to benefit tremendously. A large group of users would be very interested in combining the strengths of both HDDs and SSDs into one cohesive unit. How does CacheCade achieve this?

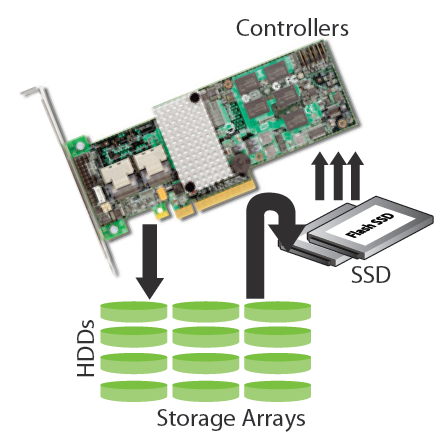

This graphic shows the most basic picture of what is being accomplished.

The RAID controller sits on top and controls all data moving into and out of the storage array. Normally, data is written directly to the HDDs, and when that data is recalled, it is read straight back off the HDDs.

CacheCade uses the SSDs as a layer of cache, essentially placing them between the HDD array and the controller.
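The read path described above can be sketched as a toy model: the controller checks the SSD layer first and falls back to the HDD array on a miss. This is a minimal sketch under stated assumptions, not LSI's firmware; the class and attribute names (`TieredStore`, `ssd_hits`, `hdd_reads`) are hypothetical, and refreshing a cached copy on write is an assumption made so the cache never serves stale data.

```python
from typing import Dict


class TieredStore:
    """Toy model of a RAID controller with an SSD cache layer in front of an HDD array.

    Hypothetical names throughout; this is not LSI's firmware or API.
    """

    def __init__(self, hdd: Dict[int, bytes]) -> None:
        self.hdd = hdd                    # HDD array: block number -> data
        self.ssd: Dict[int, bytes] = {}   # SSD cache layer (starts empty)
        self.ssd_hits = 0
        self.hdd_reads = 0

    def write(self, block: int, data: bytes) -> None:
        # Data is written to the HDD array; if a copy is cached, refresh it
        # so the SSD never serves stale data (an assumption of this sketch).
        self.hdd[block] = data
        if block in self.ssd:
            self.ssd[block] = data

    def read(self, block: int) -> bytes:
        # Check the SSD layer first; fall back to the HDD array on a miss.
        if block in self.ssd:
            self.ssd_hits += 1
            return self.ssd[block]
        self.hdd_reads += 1
        return self.hdd[block]


store = TieredStore({0: b"hot", 1: b"cold"})
store.ssd[0] = store.hdd[0]   # pretend block 0 has already been copied to the SSD
store.read(0)                 # served from the SSD layer
store.read(1)                 # served from the HDD array
print(store.ssd_hits, store.hdd_reads)  # 1 1
```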

The benefit of reading from the SSDs is dramatically faster access to any data stored there.
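A standard way to quantify that benefit is the effective access time. Here $h$ is the hit ratio (the fraction of reads served from the SSD), and $T_{\text{SSD}}$ and $T_{\text{HDD}}$ are the average access times of each tier. These symbols are introduced for illustration and are not taken from LSI's documentation:

$$
T_{\text{eff}} = h\,T_{\text{SSD}} + (1-h)\,T_{\text{HDD}}, \qquad S = \frac{T_{\text{HDD}}}{T_{\text{eff}}}
$$

Because $T_{\text{HDD}}$ is much larger than $T_{\text{SSD}}$, the speedup $S$ stays modest until $h$ is high. At $h = 0.5$, for example, $T_{\text{eff}} \ge 0.5\,T_{\text{HDD}}$, so $S$ can never exceed 2 however fast the SSD is. This is why the choice of which data to cache matters so much.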

The question, then, is exactly how data is placed on the SSDs and, more importantly, how the controller decides which data goes there.

Automation is essential here, since constantly monitoring and adjusting the cached data by hand would be impractical. LSI has developed a software algorithm that detects which data is accessed most often. Data is not tracked as individual files but as data ‘hot spots’. The most frequently accessed data is tagged as “Hot” and copied to the flash layer of storage. Because only hot application data is moved to the SSDs, unnecessary writes are eliminated, which also extends SSD longevity.

When that Hot data is accessed again, it is read from the SSD, boosting performance.
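The hot-spot approach can be illustrated with a simple access-counting sketch. Accesses are counted per fixed-size region rather than per file, and a region whose count reaches a threshold is copied to the SSD. LSI's actual algorithm is not described here. The region size (`REGION_BLOCKS`), the threshold (`PROMOTE_AFTER`), and the class name are hypothetical values chosen for illustration.

```python
from collections import Counter
from typing import Dict, Set

REGION_BLOCKS = 64   # hypothetical hot-spot granularity (blocks per region)
PROMOTE_AFTER = 3    # hypothetical access count at which a region becomes "hot"


class HotSpotCache:
    """Tracks accesses per region (not per file) and copies hot regions to the SSD."""

    def __init__(self, hdd: Dict[int, bytes]) -> None:
        self.hdd = hdd
        self.ssd: Dict[int, bytes] = {}
        self.heat: Counter = Counter()   # region id -> number of accesses
        self.hot: Set[int] = set()       # regions already copied to the SSD

    def read(self, block: int) -> bytes:
        region = block // REGION_BLOCKS
        self.heat[region] += 1
        if region not in self.hot and self.heat[region] >= PROMOTE_AFTER:
            self._promote(region)
        # Hot data is served from the SSD; everything else from the HDD.
        if block in self.ssd:
            return self.ssd[block]
        return self.hdd[block]

    def _promote(self, region: int) -> None:
        # Copy (not move) the region's blocks; the HDD keeps the original.
        # Only hot regions are ever written to the SSD, limiting flash writes.
        start = region * REGION_BLOCKS
        for block in range(start, start + REGION_BLOCKS):
            if block in self.hdd:
                self.ssd[block] = self.hdd[block]
        self.hot.add(region)


hdd = {b: bytes([b % 256]) for b in range(256)}
cache = HotSpotCache(hdd)
for _ in range(3):
    cache.read(10)   # region 0 is accessed three times and becomes hot
cache.read(200)      # region 3 is accessed once and stays on the HDD
print(sorted(cache.hot), len(cache.ssd))  # [0] 64
```

Tracking whole regions instead of files means a frequently read part of a large database file can be cached without copying the rest of that file.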

Data remains on the SSD until it is ‘retired’, which happens only when both of the following are true:

- Other data has become more relevant, or more frequently accessed, than the data being retired. This lets the system continually optimize the storage, as the Hot data shifts with whatever is currently “popular”.

- The space occupied by that data is actually needed. For instance, if other data becomes more frequently accessed but can be copied to the SSD without needing the space the ‘old’ data occupies, the old data is not removed.
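The two retirement conditions above can be sketched as a capacity-limited cache that evicts only when it is full and the newcomer is hotter than the coldest resident. This is an illustrative sketch, not LSI's policy. "Heat" is modeled as a plain access count, and the names `RetiringCache` and `offer` are hypothetical.

```python
from typing import Dict, Optional


class RetiringCache:
    """Keeps at most `capacity` hot regions; retires one only when both conditions hold."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.resident: Dict[str, int] = {}   # region -> heat (access count)

    def offer(self, region: str, heat: int) -> Optional[str]:
        """Try to cache `region`; return the name of the retired region, if any."""
        if region in self.resident:
            self.resident[region] = heat     # already cached: just update its heat
            return None
        if len(self.resident) < self.capacity:
            # Space is still available, so nothing needs to be retired.
            self.resident[region] = heat
            return None
        coldest = min(self.resident, key=self.resident.__getitem__)
        if heat > self.resident[coldest]:
            # Space is needed AND the newcomer is hotter: retire the coldest region.
            del self.resident[coldest]
            self.resident[region] = heat
            return coldest
        return None   # newcomer is not hotter, so the cached data stays put


cache = RetiringCache(capacity=2)
cache.offer("A", heat=5)
cache.offer("B", heat=2)
print(cache.offer("C", heat=1))  # None: C is not hotter than anything cached
print(cache.offer("D", heat=9))  # B: space is needed and D is hotter than B
```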

Caching effectiveness increases over time as the algorithm more accurately gauges which data needs to be cached and the cache fills with that data. The cache does not care which process is requesting the data, so multiple applications can benefit from it simultaneously.
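The warm-up effect can be shown with a small deterministic simulation. The same request stream from two applications is replayed several times, and the SSD hit rate is measured in each round. This is a sketch under stated assumptions: a region is cached after a hypothetical `PROMOTE_AFTER` accesses, capacity is treated as unlimited, and the application labels (`"web"`, `"db"`) are made up for illustration.

```python
from collections import Counter
from typing import List, Tuple

PROMOTE_AFTER = 2   # hypothetical: a region is cached after 2 accesses


def hit_rate_by_round(workload: List[Tuple[str, int]], rounds: int) -> List[float]:
    """Replay the same (app, region) request stream `rounds` times and return
    the SSD hit rate of each round. The app label is ignored by the cache,
    so any application benefits from regions cached for another."""
    counts: Counter = Counter()
    cached = set()
    rates = []
    for _ in range(rounds):
        hits = 0
        for _app, region in workload:
            if region in cached:
                hits += 1
            else:
                counts[region] += 1
                if counts[region] >= PROMOTE_AFTER:
                    cached.add(region)   # promoted; later reads hit the SSD
        rates.append(hits / len(workload))
    return rates


workload = [("web", 1), ("db", 7), ("web", 1), ("db", 8)]
print(hit_rate_by_round(workload, 3))  # [0.0, 0.5, 1.0]
```

The hit rate starts at zero with a cold cache and climbs as hot regions are identified and copied. The cache treats both applications' requests the same way.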

The SSD Review The Worlds Dedicated SSD Education and Review Resource |